Chapter 15 Computations on Iteration Spaces

15.1 Introduction

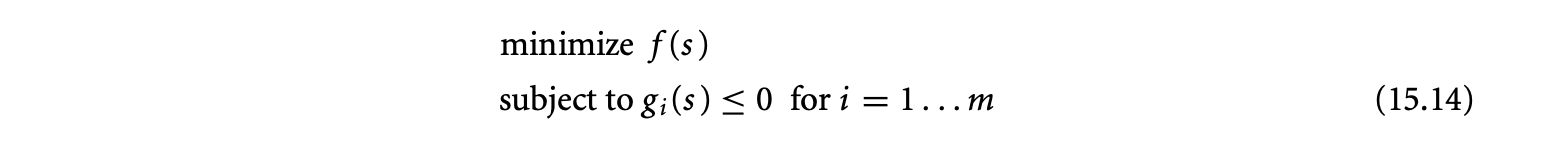

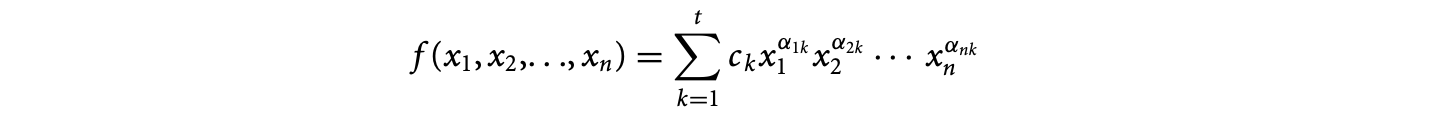

This chapter consists of two independent parts. The first deals with programs involving indexed data sets such as dense arrays and indexed computations such as loops. Our position is that high-level mathematical equations are the most natural way to express a large class of such computations, and furthermore, such equations are amenable to powerful static analyses that would enable a compiler to derive very efficient code, possibly significantly better than what a human would write. We illustrate this by describing a simple equational language and its semantic foundations and by illustrating the analyses we can perform, including one that allows the compiler to reduce the degree of the polynomial complexity of the algorithm embodied in the program.

The second part of this chapter deals with tiling, an important program reordering transformation applicable to imperative loop programs. It can be used for many different purposes. On sequential machines tiling can improve the locality of programs by exploiting reuse, so that the caches are used more effectively. On parallel machines it can also be used to improve the granularity of programs so that the communication and computation "units" are balanced.

We describe the tiling transformation, an optimization problem for selecting tile sizes, and how to generate tiled code for codes with regular or affine dependences between loop iterations. We also discuss approaches for reordering iterations, parallelizing loops, and tiling sparse computations that have irregular dependences.

15.2 The $\mathcal{Z}$-Polyhedral Model and Some Static Analyses

It has been widely accepted that the single most important attribute of a programming language is programmer productivity. Moreover, the shift to multi-core consumer systems, with the number of cores expected to double every year, necessitates the shift to parallel programs. This emphasizes the need for productivity even further, since parallel programming is substantially harder than writing single-threaded code. Even the field of high-end computing, typically focused exclusively on performance, is becoming concerned with the unacceptably high cost per megaflop of current high-end systems resulting from the required programming expertise. The current initiative is to increase programmability, portability, and robustness. DARPA's High Productivity Computing Systems (HPCS) program aims to reevaluate and redesign the computing paradigms for high-performance applications from architectural models to programming abstractions.

We focus on compute- and data-intensive computations. Many data-parallel models and languages have been developed for the analysis and transformation of such computations. These models essentially abstract programs through (a) variables representing collections of values, (b) pointwise operations on the elements in the collections, and (c) collection-level operations. The parallelism may either be specified explicitly or derived automatically by the compiler. Parallelism detection involves analyzing the dependence between computations. Computations that are independent may be executed in parallel.

We present high-level mathematical equations to describe data-parallel computations succinctly and precisely. Equations describe the kernels of many applications. Moreover, most scientific and mathematical computations, for example, matrix multiplication, LU-decomposition, Cholesky factorization, Kalman filtering, as well as many algorithms arising in RNA secondary structure prediction, dynamic programming, and so on, are naturally expressed as equations.

It is also widely known that high-level programming languages increase programmer productivity and software life cycles. The cost of this convenience comes in the form of a performance penalty compared to lower-level implementations. With the subsequent improvement of compilation technology to accommodate these higher-level constructs, this performance gap narrows. For example, most programmers never use assembly language today. As a compilation challenge, the advantages of programmability offered by equations need to be supplemented by performance. After our presentation of an equational language, we will present automatic analyses and transformations to reduce the asymptotic complexity and to parallelize our specifications. Finally, we will present a brief description of the generation of imperative code from optimized equational specifications. The efficiency of the generated imperative code is comparable to hand-optimized implementations.

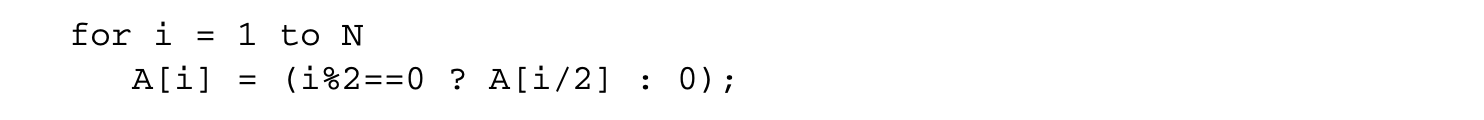

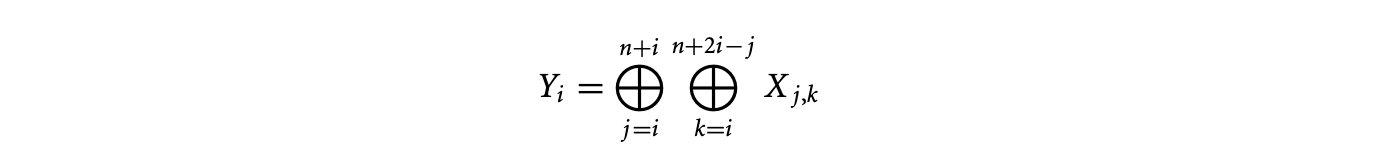

For an example of an equational specification and its automatic simplification, consider the following:

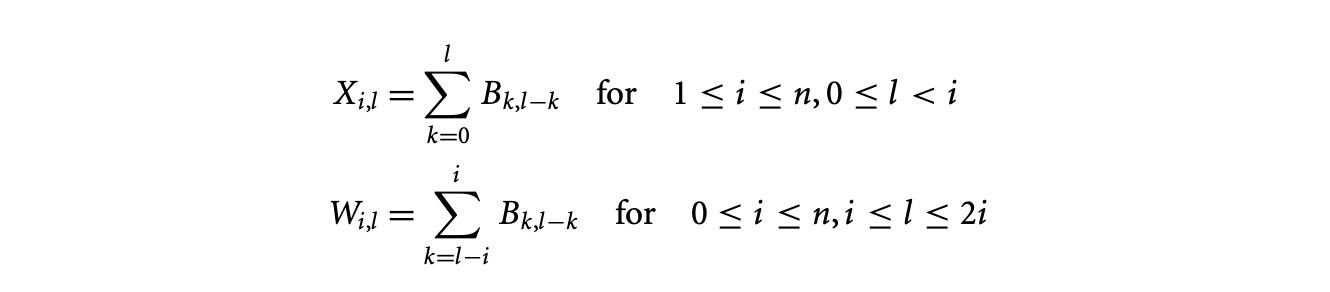

Here, the variable $Y$ is defined over a line segment, and the variables $A$ and $B$ over a triangle and a square, respectively. These are the previously mentioned collections of values and are also called the domains of the respective variables. The dependence in the given computation is such that the value of $Y$ at $i$ requires the value of $A$ at $[i, j+k]$ and the value of $B$ at $[k, j]$ for all valid values of $j$ and $k$.

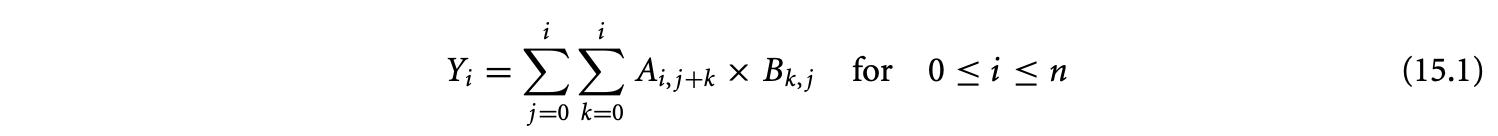

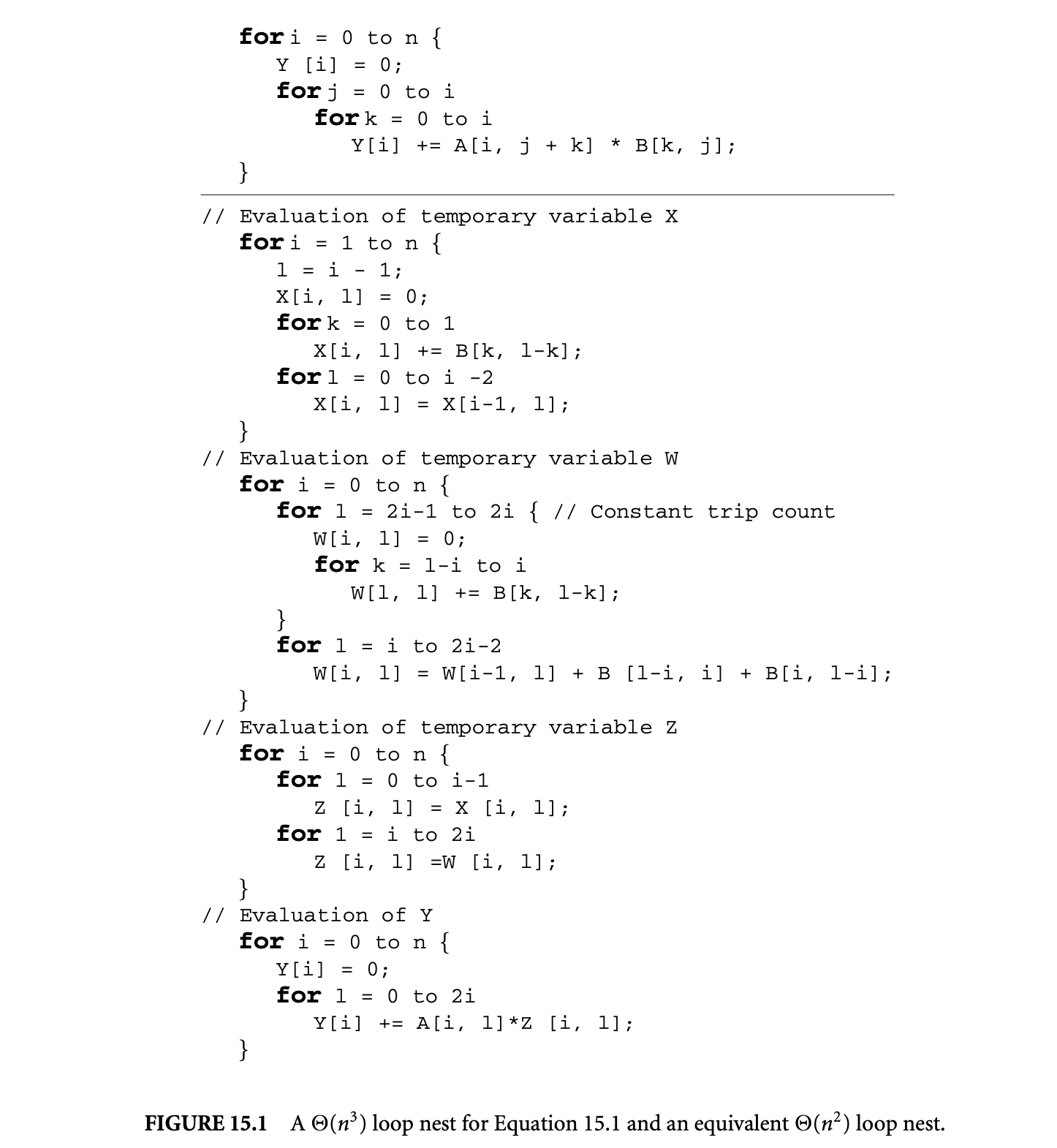

An imperative code segment that implements this equation is given in Figure 15.1. The triply nested loop (with linear bounds) indicates a cubic, $\Theta(n^3)$, asymptotic complexity in the size parameter $n$ for such an implementation. However, an implementation of Equation 15.1 with a lower polynomial degree exists and can be derived automatically. The code for this "simplified" specification is provided as well in Figure 15.1. The sequence of transformations required to optimize the initial specification is given in Section 15.2.4. These transformations have been developed at the level of equations.
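The gap between the two loop nests can be illustrated with a small Python sketch. The summation bounds ($0 \le j, k \le i$ for $0 \le i \le n$) and the function names `naive` and `simplified` are assumptions made for illustration; they are consistent with the dependences $A[i, j+k]$ and $B[k, j]$ but are not taken from Figure 15.1. The second function keeps running sums of $B$ along anti-diagonals, which is one possible way to reuse work across consecutive values of $i$; it is not necessarily the code that the automatic simplification produces.

```python
def naive(A, B, n):
    """Cubic evaluation of Y[i] = sum over 0<=j,k<=i of A[i][j+k] * B[k][j].

    The bounds on j and k are assumed for illustration.
    """
    Y = [0] * (n + 1)
    for i in range(n + 1):
        for j in range(i + 1):
            for k in range(i + 1):
                Y[i] += A[i][j + k] * B[k][j]
    return Y


def simplified(A, B, n):
    """Quadratic evaluation of the same values.

    D[c] holds the sum of B[k][j] over 0<=j,k<=i with j+k == c. Going from
    i-1 to i only adds the new row k == i and the new column j == i.
    """
    D = [0] * (2 * n + 1)
    Y = [0] * (n + 1)
    for i in range(n + 1):
        for t in range(i):
            D[i + t] += B[i][t] + B[t][i]   # points (k=i, j=t) and (k=t, j=i)
        D[2 * i] += B[i][i]                 # corner point (k=i, j=i)
        Y[i] = sum(A[i][c] * D[c] for c in range(2 * i + 1))
    return Y
```

Under these assumptions, `A` needs `n + 1` rows of `2n + 1` entries and `B` is `(n + 1)` by `(n + 1)`. The inner work per value of `i` is linear in `n`, so the total is quadratic rather than cubic.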

The equations presented so far have been of a very special form. It is primarily this special form that enables the development of sophisticated analyses and transformations. Analyses on general equations are often impossible. The class of equations that we consider consists of (a) variables defined on $\mathcal{Z}$-polyhedral domains with (b) dependences in the form of affine functions. These restrictions enable us to use linear algebraic theory and techniques. In Section 15.2.1, we present $\mathcal{Z}$-polyhedra and the associated mathematical objects that abstract the iteration domains of loop nests. Then we show the advantages of manipulating $\mathcal{Z}$-polyhedra over integer polyhedra. A language to express equations over $\mathcal{Z}$-polyhedral domains is presented in Section 15.2.3. The latter half of this section presents transformations to automatically simplify and parallelize equations. Finally, we provide a brief explanation of the transformations in the backend and code generation.

15.2.1 Mathematical Background¹

Footnote 1: Parts of this section are adapted from [37], © 2007, Association for Computing Machinery, Inc., included by permission.

First, we review some mathematical background on matrices and describe terminology. As a convention, we denote matrices with upper-case letters and vectors with lower-case. All our matrices and vectors have integer elements. We denote the identity matrix by $I$. Syntactically, the different elements of a vector will be written as a list.

We use the following concepts and properties of matrices:

- The kernel of a matrix $T$, written as $\ker(T)$, is the set of all vectors $z$ such that $Tz = 0$.

- The column (respectively row) rank of a matrix $T$ is the maximal number of linearly independent columns (respectively rows) of $T$.

- A matrix is unimodular if it is square and its determinant is either $1$ or $-1$.

Figure 15.1: A loop nest for Equation 15.1 and an equivalent loop nest.

- Two matrices $L$ and $L'$ are said to be column equivalent or right equivalent if there exists a unimodular matrix $U$ such that $L = L'U$.

- A unique representative element in each set of matrices that are column equivalent is the one in Hermite normal form (HNF).

Definition 15.1:

An $n \times m$ matrix $H$ with column rank $d$ is in HNF if:

- For columns $1, \ldots, d$, the first nonzero element is positive and is below the first positive element for the previous column.

- In the first $d$ columns, all elements above the first positive element are zero.

- The first positive entry in columns $1, \ldots, d$ is the maximal entry on its row. All elements are nonnegative in this row.

- Columns $d+1, \ldots, m$ are zero columns.

A template of a matrix in HNF is provided above. In the template, denotes the maximum element in the corresponding row, denotes elements that are not the maximum element, and denotes any integer. Both and are nonnegative elements.

For every matrix $A$, there exists a unique matrix $H$ that is in HNF and column equivalent to $A$, that is, there exists a unimodular matrix $U$ such that $A = HU$. Note that the provided definition of the HNF does not require the matrix $A$ to have full row rank.

15.2.1 Integer Polyhedra

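An integer polyhedron, $\mathcal{P}$, is a subset of $\mathbb{Z}^n$ that can be defined by a finite number of affine inequalities (also called affine constraints or just constraints when there is no ambiguity) with integer coefficients. We follow the convention that the affine constraint $c_i$ is given as $(a_i^T z + \alpha_i \ge 0)$, where $z, a_i \in \mathbb{Z}^n$ and $\alpha_i \in \mathbb{Z}$. The integer polyhedron, $\mathcal{P}$, satisfying the set of constraints $\mathcal{C} = \{c_1, \ldots, c_b\}$, is often written as $\{z \in \mathbb{Z}^n \mid Qz + q \ge 0\}$, where $Q = (a_1 \ldots a_b)^T$ is a $b \times n$ matrix and $q = (\alpha_1 \ldots \alpha_b)^T$ is a $b$-vector.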

Example 15.1:

Consider the equation

The domains of the variables , , and are, respectively, the sets , , and . These sets are polyhedra, and deriving the aforementioned representation simply requires us to obtain, through elementary algebra, all affine constraints of the correct form, yielding , , and , respectively. Nevertheless, these are less intuitive, and in our presentation, we will not conform to the formalities of representation.

A subtle point to note here is that elements of polyhedral sets are tuples of integers. The index variables , , , and are simply place holders and can be substituted by other unused names. The domain of can also be specified by the set .

We shall use the following properties and notation of integer polyhedra and affine constraints:

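- For any two coefficients $\lambda_1$ and $\lambda_2$, where $\lambda_1, \lambda_2 \in [0, 1]$ and $\lambda_1 + \lambda_2 = 1$, $\lambda_1 a + \lambda_2 b$ is said to be a convex combination of $a$ and $b$. If $a$ and $b$ are two iteration points in an integer polyhedron, $\mathcal{P}$, then any convex combination of $a$ and $b$ that has all integer elements is also in $\mathcal{P}$.

- The constraint $c \equiv (a^T z + \alpha \ge 0)$ of $\mathcal{P}$ is said to be saturated iff $(a^T z + \alpha = 0) \cap \mathcal{P} = \mathcal{P}$.

- The lineality space of $\mathcal{P}$ is defined as the linear part of the largest affine subspace contained in $\mathcal{P}$. It is given by $\ker(Q)$, where $Q$ is the constraint matrix of $\mathcal{P}$.

- The context of $\mathcal{P}$ is defined as the linear part of the smallest affine subspace that contains $\mathcal{P}$. If the saturated constraints in $\mathcal{C}$ are the rows of $\{Q_0 z + q_0 \ge 0\}$, then the context of $\mathcal{P}$ is $\ker(Q_0)$.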
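The constraint representation and the convexity property above can be checked on a small case. The following Python sketch is illustrative only: the triangle $\{(i, j) \mid 0 \le j \le i \le 4\}$, the search box, and the helper names `in_polyhedron` and `integer_points` are assumptions chosen for the example, not objects defined in this chapter.

```python
from itertools import product


def in_polyhedron(Q, q, z):
    """True if the integer point z satisfies every row of Q z + q >= 0."""
    return all(sum(a * x for a, x in zip(row, z)) + c >= 0
               for row, c in zip(Q, q))


def integer_points(Q, q, box):
    """Enumerate the integer points of {z | Q z + q >= 0} inside a finite box."""
    ranges = (range(lo, hi + 1) for lo, hi in box)
    return [z for z in product(*ranges) if in_polyhedron(Q, q, z)]


# Triangle {(i, j) | 0 <= j <= i <= 4}: rows encode j >= 0, i - j >= 0, 4 - i >= 0.
Q = [[0, 1], [1, -1], [-1, 0]]
q = [0, 0, 4]
triangle = integer_points(Q, q, [(-1, 5), (-1, 5)])

# The midpoint of (0, 0) and (4, 2) is an integer convex combination of the two.
a, b = (0, 0), (4, 2)
midpoint = tuple((x + y) // 2 for x, y in zip(a, b))
```

Here `midpoint` is `(2, 1)`, which has all integer elements and therefore must lie in the triangle.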

15.2.1.1 Parameterized Integer Polyhedra

Recall Equation 15.1. The domain of is given by the set . Intuitively, the variable is seen as a size parameter that indicates the problem instance under consideration. If we associate every iteration point in the domain of with the appropriate problem instance, the domain of would be described by the set . Thus, a parameterized integer polyhedron is an integer polyhedron where some indices are interpreted as size parameters.

An equivalence relation is defined on the set of iteration points in a parameterized polyhedron such that two iteration points are equivalent if they have identical values of size parameters. By this relation, a parameterized polyhedron is partitioned into a set of equivalence classes, each of which is identified by the vector of size parameters. Equivalence classes correspond to program instances and are, thus, called instances of the parameterized polyhedron. We identify size parameters by omitting them from the index list in the set notation of a domain.

15.2.1.2 Affine Images of Integer Polyhedra

A (standard) affine function, $f$, is a function from iteration points to iteration points. It is of the form $(z \to Tz + t)$, where $T$ is an $n \times m$ matrix and $t$ is an $n$-vector.

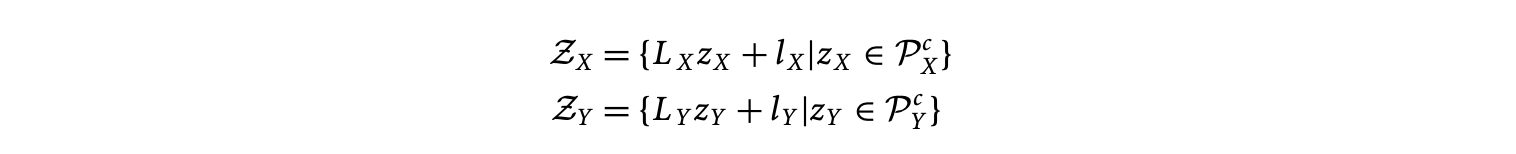

Consider the integer polyhedron $\mathcal{P} = \{z \in \mathbb{Z}^m \mid Qz + q \ge 0\}$ and the standard affine function given above. The image of $\mathcal{P}$ under $f$ is of the form $\{Tz + t \mid Qz + q \ge 0,\ z \in \mathbb{Z}^m\}$. These are the so-called linearly bounded lattices (LBLs). The family of LBLs is a strict superset of the family of integer polyhedra. Clearly, every integer polyhedron is an LBL with $T = I$ and $t = 0$. However, for an example of an LBL that is not an integer polyhedron, refer to Figure 15.2.

Figure 15.2: The LBL corresponding to the image of the polyhedron by the affine function does not contain the iteration point 8 but contains 7 and 9. Since 8 is a convex combination of 7 and 9, the set is not an integer polyhedron. Adapted from [37], © 2007, Association for Computing Machinery, Inc., included by permission.
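The same phenomenon can be reproduced with a smaller, hypothetical example (it is not the polyhedron or function of Figure 15.2): the image of the unit square $\{(i, j) \mid 0 \le i, j \le 1\}$ under the assumed function $(i, j \to i + 3j)$. The Python sketch below enumerates the image and shows a "hole" between two of its points.

```python
from itertools import product


def affine_image(points, T, t):
    """Image of a finite set of integer points under z -> T z + t."""
    return sorted({
        tuple(sum(a * x for a, x in zip(row, z)) + c for row, c in zip(T, t))
        for z in points
    })


# Hypothetical polyhedron: the unit square 0 <= i, j <= 1.
square = list(product(range(2), range(2)))

# Hypothetical affine function (i, j -> i + 3j), that is, T = [1 3] and t = 0.
image = affine_image(square, [[1, 3]], [0])
```

The image contains the points 1 and 3 but not their integer convex combination 2, so it cannot be an integer polyhedron.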

15.2.1.3 Affine Lattices

Often, the domain over which an equation is specified, or the iteration space of a loop program, does not contain every integer point that satisfies a set of affine constraints.

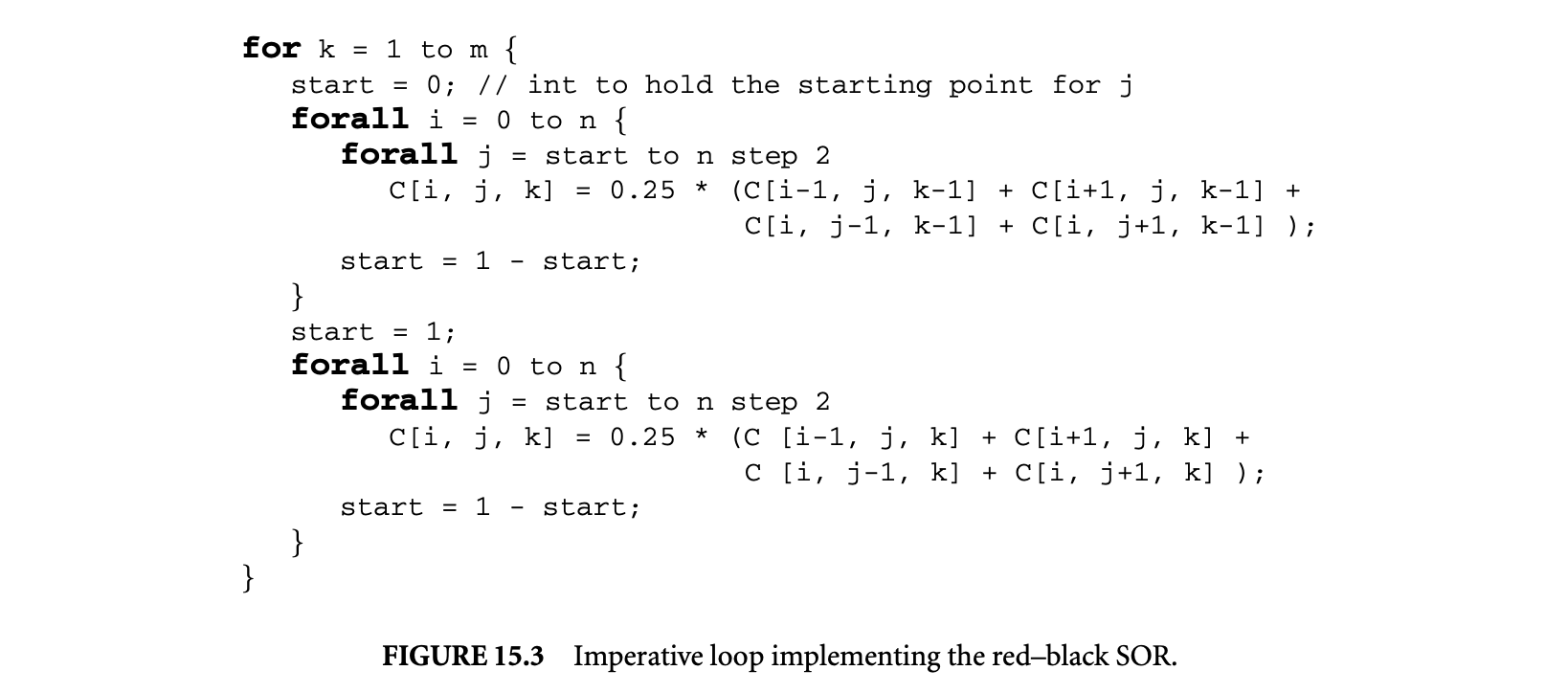

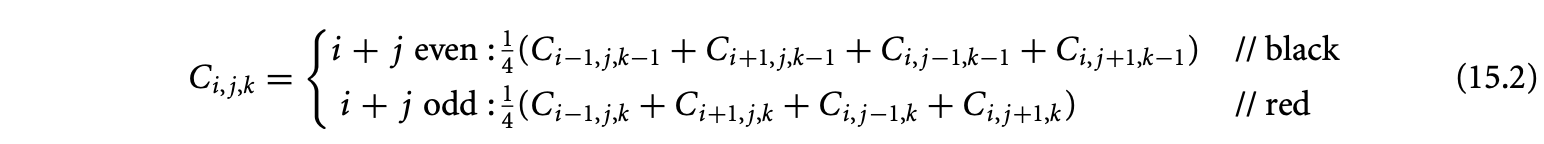

Example 15.2: Consider the red-black SOR for the iterative solution of partial differential equations. Iterations in the $(i, j)$ plane are divided into "red" points and "black" points, similar to the layout of squares on a chess board. First, black points (at even $i + j$) are computed using the four neighboring red points (at odd $i + j$), and then the red points are computed using their four neighboring black points. These two phases are repeated until convergence. Introducing an additional dimension to denote the iterative application of the two phases, we get the following equation:

where the domain of is , and are size parameters, and is given as input. The imperative loop nest that implements this equation is given in Figure 15.3.
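The two-phase structure can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the loop nest of Figure 15.3: the update rule (a relaxed average of the four neighbors with a hypothetical relaxation factor `omega`), the in-place array `C`, and the helper name `phase_points` are all assumed. The point of the sketch is the non-unit stride of the inner loop, which visits only the points of one color.

```python
def phase_points(n, m, parity):
    """Interior points (i, j) of an n x m grid with (i + j) % 2 == parity."""
    for i in range(1, n - 1):
        # First interior column of the right color, then every second column.
        j0 = 1 if (i + 1) % 2 == parity else 2
        for j in range(j0, m - 1, 2):
            yield i, j


def red_black_sweep(C, omega=1.0):
    """One black phase (even i + j) followed by one red phase (odd i + j)."""
    n, m = len(C), len(C[0])
    for parity in (0, 1):
        for i, j in phase_points(n, m, parity):
            avg = (C[i - 1][j] + C[i + 1][j] + C[i][j - 1] + C[i][j + 1]) / 4.0
            C[i][j] = (1.0 - omega) * C[i][j] + omega * avg
    return C
```

Within one phase, every updated point reads only points of the other color, so the iterations of a phase are independent of each other.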

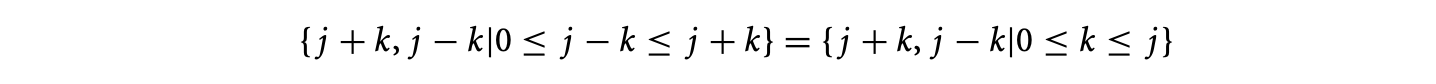

We see that the first (respectively second) branch of the equation is not defined over all iteration points that satisfy a set of affine constraints, namely, , but over points that additionally satisfy (respectively ). This additional constraint in the first branch of the equation is satisfied precisely by the iteration points that can be expressed as an integer linear combination of the vectors . The vectors and are the generators of the lattice on which these iteration points lie.

The additional constraint in the second branch of the equation is satisfied precisely by iteration points that can be expressed as the following affine combination:

Formally, the lattice generated by a matrix $L$ is the set of all integer linear combinations of the columns of $L$. If the columns of a matrix are linearly independent, they constitute a basis of the generated lattice. The lattices generated by two matrices of identical dimensions are equal iff the matrices are column equivalent. In general, the lattices generated by two arbitrary matrices are equal iff the submatrices corresponding to the nonzero columns in their HNF are equal.
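The HNF-based equality test can be sketched in Python. The routine below is one straightforward way to reach a matrix of the shape described in Definition 15.1 using unimodular column operations (Euclidean elimination along each row); it is an illustrative sketch, not the algorithm used by any particular tool. The generator matrices in the usage lines are assumed examples for the lattice of points with even $i + j$.

```python
def hnf(A):
    """Column-style Hermite normal form of an integer matrix (list of rows)."""
    H = [row[:] for row in A]
    n, m = len(H), len(H[0])

    def add_col(dst, src, factor):
        # Unimodular column operation: col[dst] += factor * col[src].
        for i in range(n):
            H[i][dst] += factor * H[i][src]

    c = 0  # index of the next pivot column
    for r in range(n):
        if c == m:
            break
        # Euclid on row r: leave at most one nonzero among columns c..m-1.
        while True:
            nz = [j for j in range(c, m) if H[r][j] != 0]
            if len(nz) <= 1:
                break
            p = min(nz, key=lambda j: abs(H[r][j]))
            for j in nz:
                if j != p:
                    add_col(j, p, -(H[r][j] // H[r][p]))
        if not nz:
            continue  # no pivot in this row
        p = nz[0]
        for i in range(n):  # move the pivot column into position c
            H[i][c], H[i][p] = H[i][p], H[i][c]
        if H[r][c] < 0:  # make the pivot positive
            for i in range(n):
                H[i][c] = -H[i][c]
        for j in range(c):  # entries left of the pivot end up in [0, pivot)
            add_col(j, c, -(H[r][j] // H[r][c]))
        c += 1
    return H


def nonzero_columns(H):
    return [col for col in zip(*H) if any(col)]


def same_lattice(A, B):
    """Two generator matrices span the same lattice iff their HNFs agree."""
    return nonzero_columns(hnf(A)) == nonzero_columns(hnf(B))


# Assumed generators of the points with even i + j: (1, 1) and (1, -1).
even_a = [[1, 1], [1, -1]]
# A different, redundant generating set for the same lattice.
even_b = [[1, 1, 2], [1, -1, 0]]
```

Because only the nonzero columns are compared, `same_lattice` also handles generator matrices with different numbers of columns.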

As seen in the previous example, we need a generalization of the lattices generated by a matrix, additionally allowing offsets by constant vectors. These are called affine lattices. An affine lattice is a subset of $\mathbb{Z}^n$ of the form $\{Lz + l \mid z \in \mathbb{Z}^m\}$, where $L$ and $l$ are an $n \times m$ matrix and an $n$-vector, respectively. We call $z$ the coordinates of the affine lattice.

The affine lattices $\{Lz + l \mid z \in \mathbb{Z}^m\}$ and $\{L'z' + l' \mid z' \in \mathbb{Z}^{m'}\}$ are equal iff the lattices generated by $L$ and $L'$ are equal and $l' = Lz_0 + l$ for some constant vector $z_0$. The latter requirement basically enforces that the offset of one lattice lies on the other lattice.

15.2.1.4 $\mathcal{Z}$-Polyhedra

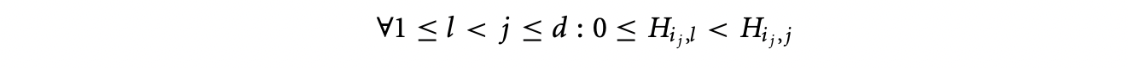

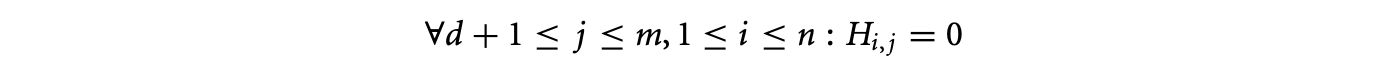

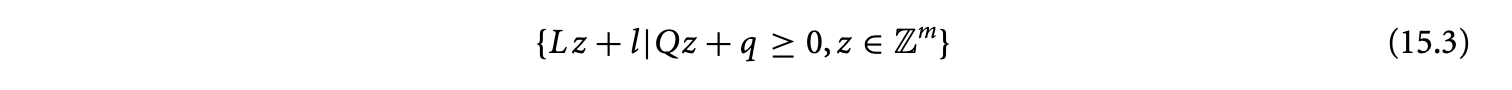

A $\mathcal{Z}$-polyhedron is the intersection of an integer polyhedron and an affine lattice. Recall the set of iteration points defined by either branch of the equation for the red-black SOR. As we saw above, these iteration points lie on an affine lattice in addition to satisfying a set of affine constraints. Thus, the set of these iteration points is precisely a $\mathcal{Z}$-polyhedron. When the affine lattice is the canonical lattice, $\mathbb{Z}^n$, a $\mathcal{Z}$-polyhedron is also an integer polyhedron. We adopt the following representation for $\mathcal{Z}$-polyhedra:

$$\{Lz + l \mid Q^c z + q^c \ge 0,\ z \in \mathbb{Z}^m\} \qquad (15.3)$$

where $L$ has full column rank, and the polyhedron $\mathcal{P}^c = \{z \mid Q^c z + q^c \ge 0,\ z \in \mathbb{Z}^m\}$ has a context that is the universe, $\mathbb{Z}^m$. $\mathcal{P}^c$ is called the coordinate polyhedron of the $\mathcal{Z}$-polyhedron. The $\mathcal{Z}$-polyhedron for which $L$ has no columns has a coordinate polyhedron in $\mathbb{Z}^0$.

We see that every $\mathcal{Z}$-polyhedron is an LBL simply by observing that the representation for a $\mathcal{Z}$-polyhedron is in the form of an affine image of an integer polyhedron. However, the LBL in Figure 15.2 is clearly not a $\mathcal{Z}$-polyhedron. There does not exist any lattice with which we can intersect the integer polyhedron to get the set of iteration points of the LBL. Thus, the family of LBLs is a strict superset of the family of $\mathcal{Z}$-polyhedra.

Our representation for $\mathcal{Z}$-polyhedra as affine images of integer polyhedra is specialized through the restriction to $L$ and $\mathcal{P}^c$. We may interpret the $\mathcal{Z}$-polyhedral representation in Equation 15.3 as follows. It is said to be based on the affine lattice given by $\{Lz + l \mid z \in \mathbb{Z}^m\}$. Iteration points of the $\mathcal{Z}$-polyhedral domain are points of the affine lattice corresponding to valid coordinates. The set of valid coordinates is given by the coordinate polyhedron.
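The interpretation in terms of valid coordinates can be made concrete with a small Python sketch. Everything in it is an assumed example: the domain (points of the square $0 \le i, j \le 3$ with even $i + j$), the lattice basis with columns $(1, 1)$ and $(0, 2)$, and the helper name `affine_lattice_points`. The sketch enumerates the image of a coordinate polyhedron and compares it with the direct intersection of the square and the lattice.

```python
from itertools import product


def affine_lattice_points(L, l, Qc, qc, box):
    """Enumerate {L z + l | Qc z + qc >= 0} for integer z in a finite search box."""
    points = set()
    for z in product(*(range(lo, hi + 1) for lo, hi in box)):
        valid = all(sum(a * x for a, x in zip(row, z)) + c >= 0
                    for row, c in zip(Qc, qc))
        if valid:
            points.add(tuple(sum(a * x for a, x in zip(row, z)) + c
                             for row, c in zip(L, l)))
    return points


# Assumed lattice basis: (i, j) = (z0, z0 + 2*z1), so i + j is always even.
L = [[1, 0], [1, 2]]
l = [0, 0]

# Coordinate polyhedron: 0 <= z0 <= 3 and 0 <= z0 + 2*z1 <= 3.
Qc = [[1, 0], [-1, 0], [1, 2], [-1, -2]]
qc = [0, 3, 0, 3]

from_coordinates = affine_lattice_points(L, l, Qc, qc, [(-3, 3), (-3, 3)])
by_intersection = {(i, j) for i in range(4) for j in range(4) if (i + j) % 2 == 0}
```

Both constructions describe the same eight iteration points; the first one exposes the lattice explicitly, which is the information the later analyses rely on.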

15.2.1.4.1 Parameterized $\mathcal{Z}$-Polyhedra

A parameterized $\mathcal{Z}$-polyhedron is a $\mathcal{Z}$-polyhedron where some rows of its corresponding affine lattice are interpreted as size parameters. An equivalence relation is defined on the set of iteration points in a parameterized $\mathcal{Z}$-polyhedron such that two iteration points are equivalent if they have identical values of size parameters. By this relation, a parameterized $\mathcal{Z}$-polyhedron is partitioned into a set of equivalence classes, each of which is identified by the vector of size parameters. Equivalence classes correspond to program instances and are, thus, called instances of the parameterized $\mathcal{Z}$-polyhedron.

For the sake of explanation, and without loss of generality, we may impose that the rows that denote size parameters are before all non-parameter rows. The equivalent $\mathcal{Z}$-polyhedron based on the HNF of such a lattice has the important property that all points of the coordinate polyhedron with identical values of the first few indices belong to the same instance of the parameterized $\mathcal{Z}$-polyhedron.

Example 15.3

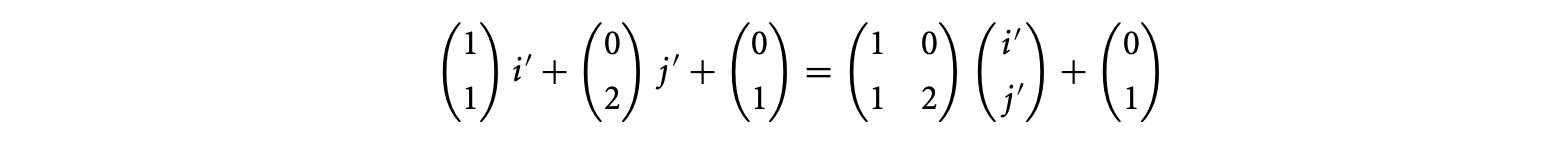

Consider the $\mathcal{Z}$-polyhedron given by the intersection of the polyhedron and the lattice .¹ It may be written as

For both the polyhedron and the affine lattice, the specification of the coordinate space is redundant. It can be derived from the number of indices and is therefore dropped for the sake of brevity.

Now, suppose the first index, , in the polyhedron is the size parameter. As a result, the first row in the lattice corresponding to the $\mathcal{Z}$-polyhedron is the size parameter. The HNF of this lattice is . The equivalent $\mathcal{Z}$-polyhedron is

The iterations of this $\mathcal{Z}$-polyhedron belong to the same program instance iff they have the same coordinate index . Note that valid values of the parameter row trivially have a one-to-one correspondence with values of , identity being the required bijection. In general, however, the bijection need not be the identity. Nevertheless, the required property remains invariant. For example, consider the following $\mathcal{Z}$-polyhedron with the first two rows considered as size parameters:

Here, valid values of the parameter rows have a one-to-one correspondence with the values of and , but it is impossible to obtain identity as the required bijection.

15.2.1.5 Affine Lattice Functions

Affine lattice functions are of the form $(Kz + k \to Rz + r)$, where $K$ has full column rank. Such functions provide a mapping from the iteration $Kz + k$ to the iteration $Rz + r$. We have imposed that $K$ have full column rank to guarantee that $(Kz + k \to Rz + r)$ be a function and not a relation, mapping any point in its domain to a unique point in its range. All standard affine functions are also affine lattice functions.

The mathematical objects introduced here are used to abstract the iteration domains and dependences between computations. In the next two sections, we will show the advantages of manipulating equations with $\mathcal{Z}$-polyhedral domains instead of polyhedral domains and present a language for the specification of such equations.

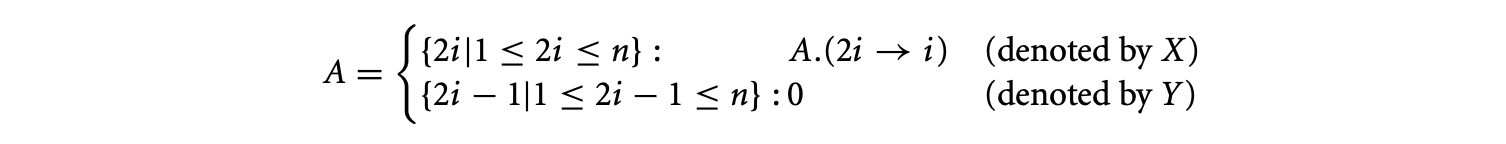

15.2.2 The $\mathcal{Z}$-Polyhedral Model

We will now develop the $\mathcal{Z}$-polyhedral model that enables the specification, analysis, and transformation of equations described over $\mathcal{Z}$-polyhedral domains. It has its origins in the polyhedral model that has been developed for over a quarter century. The polyhedral model has been used in a variety of contexts, namely, automatic parallelization of loop programs, locality, hardware generation, verification, and, more recently, automatic reduction of asymptotic computational complexity. However, the prime limitation of the polyhedral model lay in its requirement for dense iteration domains. This motivated the extension to $\mathcal{Z}$-polyhedral domains. As we have seen in the red-black SOR, $\mathcal{Z}$-polyhedral domains describe the iterations of a regular loop with non-unit stride.

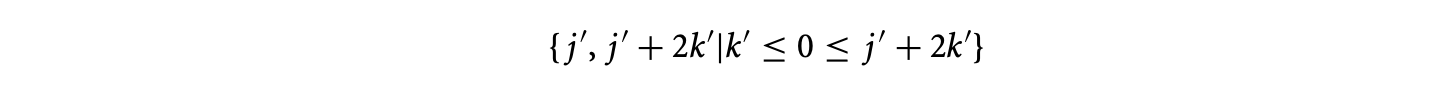

In addition to allowing more general specifications, the $\mathcal{Z}$-polyhedral model enables more sophisticated analyses and transformations by providing greater information in the specifications, namely, pertaining to lattices. The example below demonstrates the advantages of manipulating $\mathcal{Z}$-polyhedral domains. The variable is defined over the domain .²

Code fragments in this section are adapted from [37], © 2007, Association for Computing Machinery, Inc., included by permission.

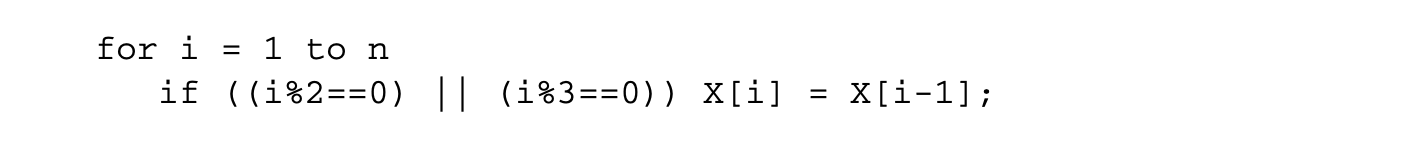

In the loop, only iteration points that are a multiple of 2 or 3 execute the statement $X[i] = X[i-1]$. The iteration at $i = 5$ may be excluded from the loop nest. Generalizing, any iteration that can be written in the form $6j + 5$ may be excluded from the loop nest. The same argument applies to iterations that can be written in the form $6j + 1$. As a result of these "holes," all iterations at $6j + 2$ may be executed in parallel at the first time step. The iterations at $6j$ may also be executed in parallel at the first time step. At the next time step, we may execute iterations at $6j + 3$ and finally iterations at $6j + 4$. The length of the longest dependence chain is 3. Thus, the loop nest can be parallelized to execute in constant time as follows:

However, our derivation of parallel code requires manipulating $\mathcal{Z}$-polyhedral domains. A polyhedral approximation of the problem cannot yield such a parallelization.
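The schedule can be checked with a short Python sketch. It assumes that the guarded statement copies the previous element, `X[i] = X[i-1]`, for every `i` that is a multiple of 2 or 3; both this statement and the function names are assumptions for illustration. Each of the three steps below uses a loop with stride 6, and within a step no iteration reads a value written in the same step, so the iterations of a step could run in parallel.

```python
def sequential(X0, n):
    """Reference loop: guarded update in increasing order of i."""
    X = X0[:]
    for i in range(1, n + 1):
        if i % 2 == 0 or i % 3 == 0:
            X[i] = X[i - 1]
    return X


def three_step_schedule(X0, n):
    """Same computation as three steps of mutually independent iterations."""
    X = X0[:]
    # Step 1: i = 6j + 2 and i = 6j read the untouched "holes" 6j + 1 and 6j - 1.
    for i in list(range(2, n + 1, 6)) + list(range(6, n + 1, 6)):
        X[i] = X[i - 1]
    # Step 2: i = 6j + 3 reads the value produced at 6j + 2.
    for i in range(3, n + 1, 6):
        X[i] = X[i - 1]
    # Step 3: i = 6j + 4 reads the value produced at 6j + 3.
    for i in range(4, n + 1, 6):
        X[i] = X[i - 1]
    return X
```

The number of steps is fixed at three regardless of `n`, which is what allows constant-time execution given enough processors.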

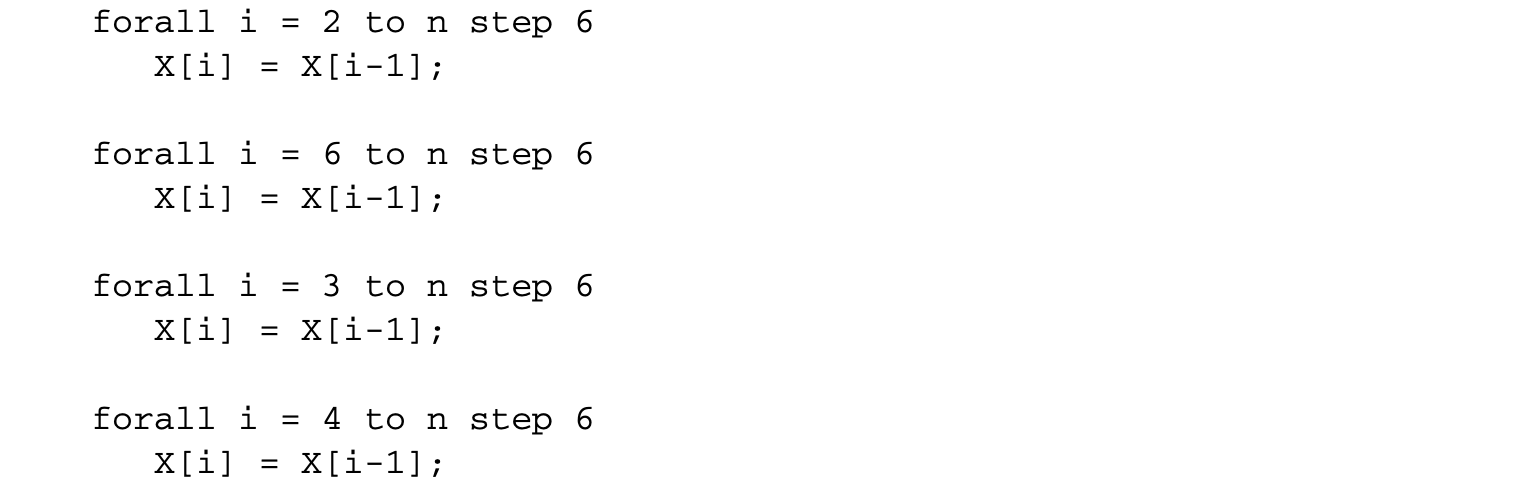

Finally, the $\mathcal{Z}$-polyhedral model allows specifications with a more general dependence pattern than the specifications in the polyhedral model. Consider the following equation, which cannot be expressed in the polyhedral model.

where and the corresponding loop is

This program exhibits a dependence pattern that is richer than the affine dependences of the polyhedral model. In other words, it is impossible to write an equivalent program in the polyhedral model, that is, without the use of the mod operator or non-unit stride loops, that can perform the required computation. One may consider replacing the variable with two variables and corresponding to the even and odd points of such that and . However, the definition of now requires the mod operator, because and .

Thus, the $\mathcal{Z}$-polyhedral model is a strict generalization of the polyhedral model and enables more powerful optimizations.

15.2.3 Equational Language

In our presentation of the red-black SOR in Section 15.2.1.3, we studied the domains of the two branches of Equation 15.2. More specifically, these are the branches of the case expression in the right-hand side (rhs) of the equation. In general, our techniques require the analysis and transformation of the subexpressions that constitute the rhs of equations, treating expressions as first-class objects.

For example, consider the simplification of Equation 15.1. As written, the simplification transforms the accumulation expression in the rhs of the equation. However, one would expect the technique to be able to decrease the asymptotic complexity of the following equation as well.

Generalizing, one would reasonably expect the existence of a technique to reduce the complexity of evaluation of the accumulation subexpression, irrespective of its "level." This motivates a homogeneous treatment of the subexpression at any level. At the lowest level of specification, a subexpression is a variable (or a constant) associated with a domain. Generalizing, we associate domains to arbitrary subexpressions.

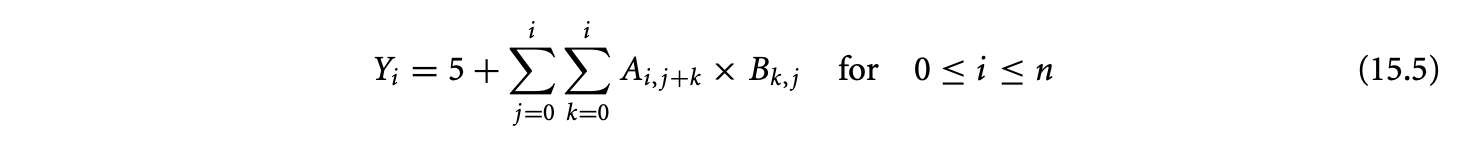

The treatment of expressions as first-class objects leads to the design of a functional language where programs are a finite list of (mutually recursive) equations of the form Var = Expr, where both Var and Expr denote mappings from their respective domains to a set of values (similar to multidimensional arrays). A variable is defined by at most one equation. Expressions are constructed by the rules given in Table 15.1, column 2. The domains of all variables are declared, the domains of constants are either declared or defined over $\mathbb{Z}^0$ by default, and the domains of expressions are derived by the rules given in Table 15.1, column 3. The function specified in a dependence expression is called the dependence function (or simply a dependence), and the function specified in a reduction is called the projection function (or simply a projection).

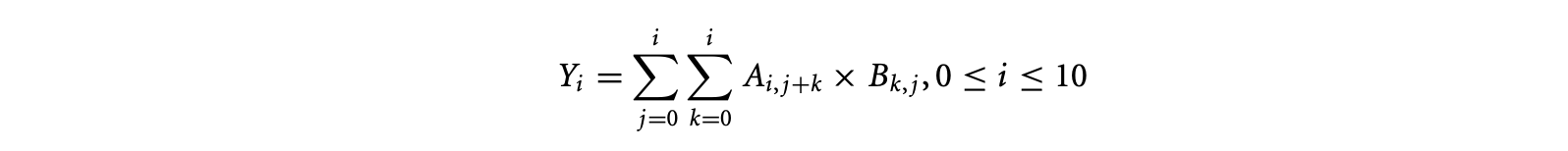

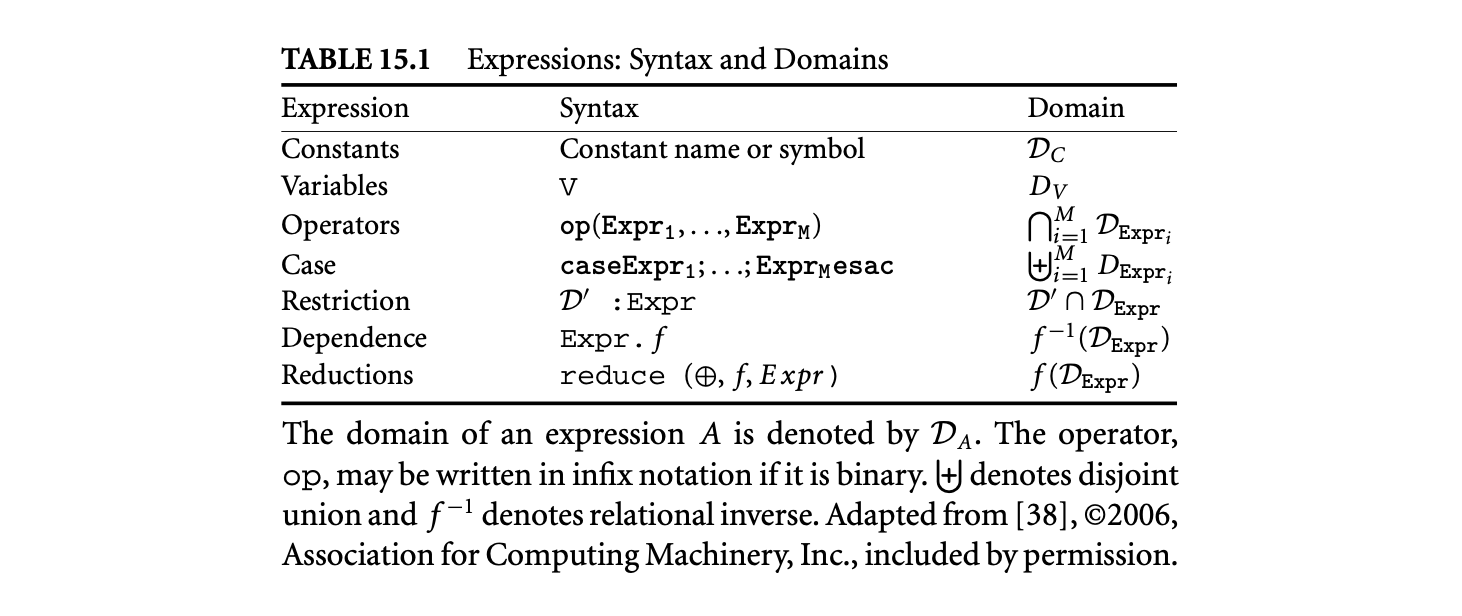

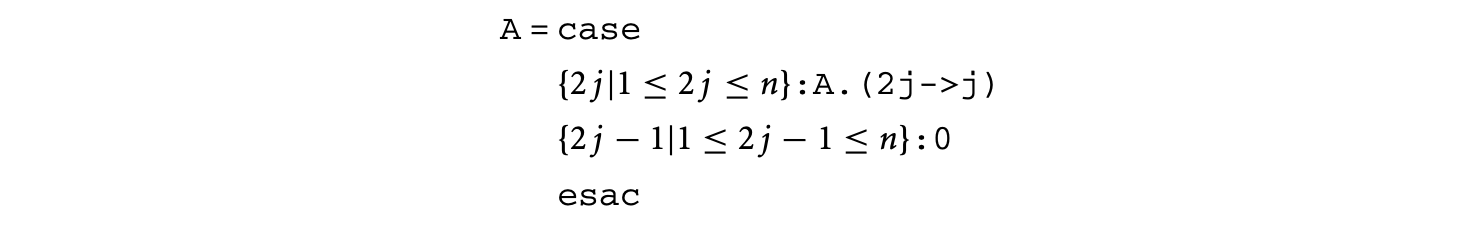

In this language, Equation 15.5 would be a syntactically sugared version of the following concrete problem.

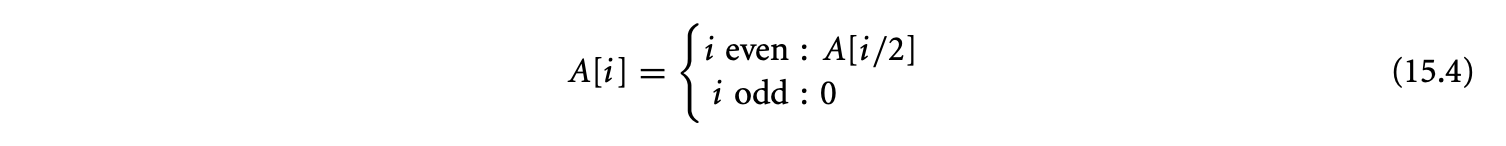

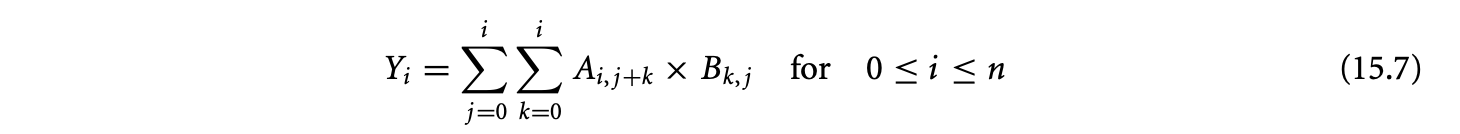

In the equation above, 5 is a constant expression defined over and Y, A, and B are variables. In addition to the equation, the domains of Y, A, and B would be declared as the sets , , and , respectively. The reduction expression is the accumulation in Equation 15.5. Summation is expressed by the reduction operator + (other possible reduction operators are *, max, min, or, and, etc.). The projection function (i,j,k->i) specifies that the accumulation is over the space spanned by $j$ and $k$, resulting in values in the one-dimensional space spanned by $i$. A subtle and important detail is that the expression that is accumulated is defined over a domain in the three-dimensional space spanned by $i$, $j$, and $k$ (this information is implicit in standard mathematical specifications as in Equation 15.5). This is an operator expression equal to the product of the value of A at $[i, j+k]$ and B at $[k, j]$. In the space spanned by $i$, $j$, and $k$, the required dependences on A and B are expressed through the dependence expressions A.(i,j,k->i,j+k) and B.(i,j,k->k,j), respectively. The equation does not contain any case or restrict constructs. For an example of these two constructs, refer back to Equation 15.4. In our equational specification, the equation would be written as

where the domains of and the constant are and , respectively. There are two branches of the case expression, each of which is a restriction expression. We have not provided domains of any of the subexpressions mentioned above for the sake of brevity. These can be computed using the rules given in Table 15.1, column 3.

15.2.3.1

Parts of this section are adapted from [38], © 2006, Association for Computing Machinery, Inc., included by permission.

At this point, we intuitively understand the semantics of expressions. Here, we provide the formal semantics of expressions over their domains of definition. At an iteration point in its domain, the value of:

- A constant expression is the associated constant.

- A variable is either provided as input or given by an equation; in the latter case, it is the value, at that point, of the expression on its rhs.

- An operator expression is the result of applying the operator to the values, at that point, of its expression arguments. The operator is an arbitrary, iteration-wise, single-valued function.

- A case expression is the value, at that point, of the alternative to whose domain the point belongs. Alternatives of a case expression are defined over disjoint domains to ensure that the case expression is not under- or overdefined.

- A restriction of an expression to a subdomain is the value of that expression at the point.

- A dependence expression is the value of its subexpression at the image of the point under the dependence function, which is an affine lattice function.

- A reduction expression is the application of the reduction operator to the values of its body at all iteration points in the body's domain that the projection function maps to the point. Since the reduction operator is an associative and commutative binary operator, we may choose any order of its application.
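The semantics listed above can be mimicked by a small evaluator. The following is a minimal sketch, not the language's implementation: it assumes finite domains stored as Python sets of integer tuples, and every name in it (`const`, `var`, `op`, `restrict`, `case`, `dep`, `reduce_`) is hypothetical. Because a preimage cannot be computed from a finite set alone, `dep` takes the candidate consumer points as an extra argument.

```python
# An expression is modeled as a pair (domain, value_fn).

def const(c, domain):
    return (set(domain), lambda z: c)

def var(table):
    # Input variable given as a dict {point: value}.
    return (set(table), lambda z: table[z])

def op(fn, *args):
    # Defined on the intersection of the argument domains.
    dom = set.intersection(*(d for d, _ in args))
    return (dom, lambda z: fn(*(v(z) for _, v in args)))

def restrict(sub, expr):
    d, v = expr
    return (d & set(sub), v)

def case(*alts):
    # Alternatives must be defined over pairwise disjoint domains.
    dom = set()
    for d, _ in alts:
        assert not (dom & d), "case alternatives overlap"
        dom |= d

    def value(z):
        for d, v in alts:
            if z in d:
                return v(z)
        raise KeyError(z)

    return (dom, value)

def dep(expr, f, consumer_points):
    # Value at z is the value of expr at f(z); the domain is the preimage
    # of expr's domain, restricted to the supplied candidate points.
    d, v = expr
    return ({z for z in consumer_points if f(z) in d}, lambda z: v(f(z)))

def reduce_(binop, f, expr):
    # Accumulate the body's values at all points that f maps to the same image.
    d, v = expr
    acc = {}
    for z in sorted(d):
        y = f(z)
        acc[y] = binop(acc[y], v(z)) if y in acc else v(z)
    return (set(acc), lambda z: acc[z])

# Usage: a prefix sum Y_i = sum of X_j for 1 <= j <= i, written as a reduction.
n = 4
X = var({(j,): 10 * j for j in range(1, n + 1)})
space = [(i, j) for i in range(1, n + 1) for j in range(1, i + 1)]
body = dep(X, lambda z: (z[1],), space)                      # X.(i,j -> j)
Y = reduce_(lambda a, b: a + b, lambda z: (z[0],), body)     # projection (i,j -> i)
```

The evaluator is eager only in `reduce_`; all other constructs build closures, which mirrors the point-wise reading of the semantics.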

It is often convenient to have a variable defined either entirely as input or only by an equation. The former is called an input variable and the latter is a computed variable. So far, all our variables have been of these two kinds only. Computed variables are just names for valid expressions.

15.2.3.2 The Family of Domains

Variables (and expressions) are defined over $\mathcal{Z}$-polyhedral domains. Let us study the compositional constructs in Table 15.1 to get a more precise understanding of the family of $\mathcal{Z}$-polyhedral domains.

For compound expressions to be defined over the same family of domains as their subexpressions, the family should be closed under intersection (operator expressions, restrictions), union (case expression), and preimage (dependence expressions) and image (reduction expressions) by the family of functions. With closure, we mean that a (valid) domain operation on two elements of the family of domains should result in an element that also belongs to the family. For example, the family of integer polyhedra is closed under intersection but not under images, as demonstrated by the LBL in Figure 15.2. The family of integer polyhedra is closed under intersection since the intersection of two integer polyhedra that lie in the same dimensional space results in an integer polyhedron that satisfies the constraints of both the integer polyhedra.

In addition to intersection, union, and preimage and image by the family of functions, most analyses and transformations (e.g., simplification, code generation, etc.) require closure under the difference of two domains. With closure under the difference of domains, we may always transform any specification to have only input and computed variables.

The family of $\mathcal{Z}$-polyhedral domains should be closed under the domain operations mentioned above. This constraint is unsatisfied if the elements of this family are $\mathcal{Z}$-polyhedra. For example, the union of two $\mathcal{Z}$-polyhedra is not a $\mathcal{Z}$-polyhedron. Also, the LBL in Figure 15.2 shows that the image of a $\mathcal{Z}$-polyhedron is not a $\mathcal{Z}$-polyhedron. However, if the elements of the family of $\mathcal{Z}$-polyhedral domains are unions of $\mathcal{Z}$-polyhedra, then all the domain operations mentioned above maintain closure.
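The closure argument can be checked on a one-dimensional toy case. The sketch below is illustrative only: it assumes bounded one-dimensional domains, where an integer polyhedron is simply a run of consecutive integers, and the helper names are hypothetical.

```python
def integer_points(lo, hi):
    # Integer points of the interval [lo, hi].
    return set(range(lo, hi + 1))

def is_integer_interval(points):
    # In one dimension, a bounded integer polyhedron is a set of consecutive integers.
    return bool(points) and points == set(range(min(points), max(points) + 1))

P = integer_points(0, 3)
Q = integer_points(2, 6)
R = integer_points(8, 9)

intersection = P & Q          # still a run of consecutive integers
image = {2 * i for i in P}    # image under the affine function i -> 2i has holes
union = P | R                 # two separated pieces

closed_under_intersection = is_integer_interval(intersection)
closed_under_image = is_integer_interval(image)
closed_under_union = is_integer_interval(union)
```

The image `{0, 2, 4, 6}` is the intersection of an interval with the lattice of even integers, which is why lattices enter the family of domains; the union needs two pieces, which is why the family consists of unions.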

15.2.3.3 Parameterized Specifications

Extending the concept of parameterized $\mathcal{Z}$-polyhedra, it is possible to parameterize the domains of variables and expressions with size parameters. This leads to parameterized equational specifications. Instances of the parameterized $\mathcal{Z}$-polyhedra correspond to program instances.

Every program instance in a parameterized specification is independent, so all functions should map consumer iterations to producer iterations within the same program instance.

15.2.3.4 Normalization

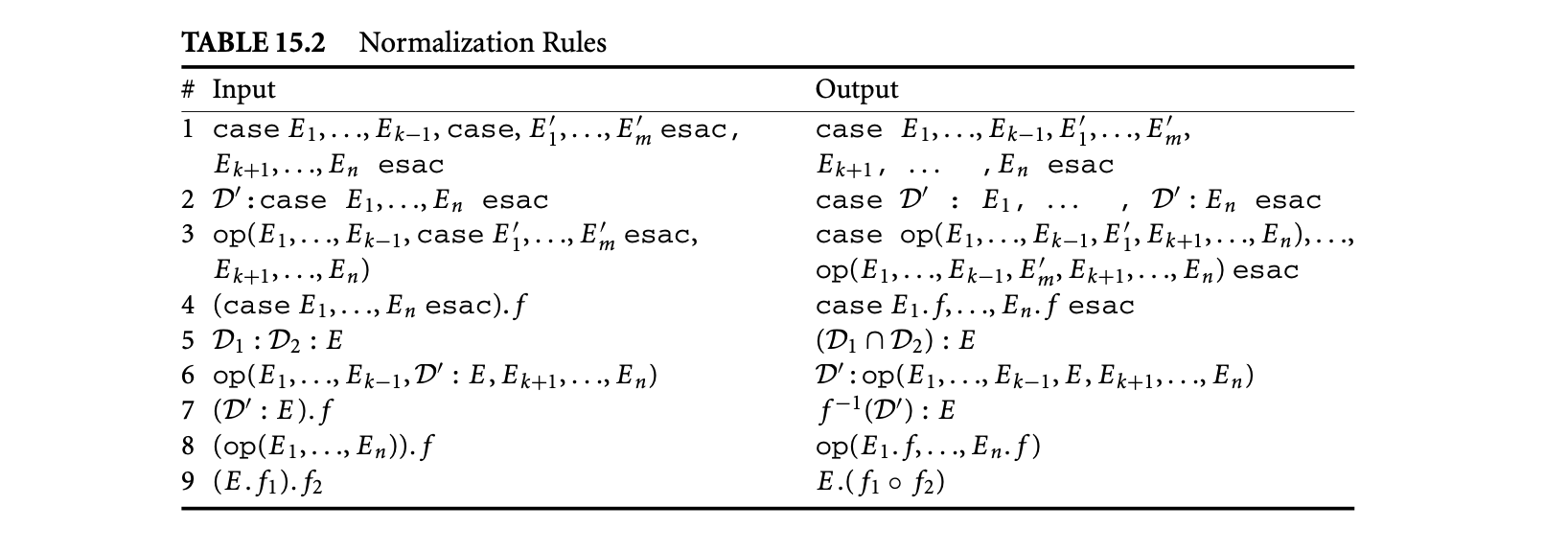

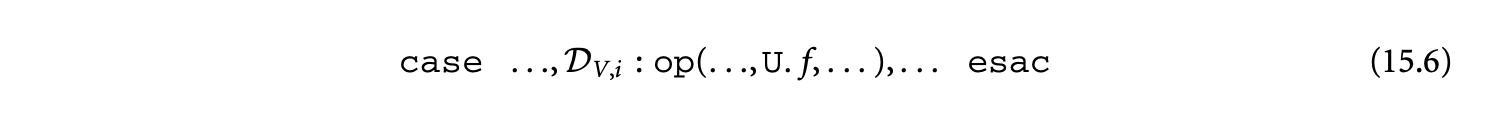

For most analyses and transformations (e.g., the simplification of reductions, scheduling, etc.), we need equations in a special normal form. Normalization is a transformation of an equational specification to obtain an equivalent specification containing only equations of the canonic forms or , where the expression is of the form

and is a variable or a constant.

Such a normalization transformation first introduces an equation for every reduce expression, replacing its occurrence with the corresponding local variable. As a result, we get equations of the forms or , where the expression does not contain any reduce subexpressions. Subsequently, these expressions are processed by a rewrite engine to obtain equivalent expressions of the form specified in Equation 15.6. The rules for the rewrite engine are given in Table 15.2. Rules 1 to 4 "bubble" a single case expression to the outermost level, rules 5 to 7 then "bubble" a single restrict subexpression to the second level, rule 8 gets the operator to the penultimate level, and rule 9 is a dependence composition to obtain a single dependence at the innermost level.
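Dependence composition follows from the semantics of dependence expressions: the value of a nested dependence at a point is the value of the innermost expression at the point obtained by applying the outer function first and the inner function second. A minimal sketch, assuming affine functions stored as a pair (matrix, offset vector); the representation and helper names are hypothetical and do not reproduce Table 15.2.

```python
def apply_affine(f, z):
    # f = (M, c) represents z -> M z + c.
    M, c = f
    return tuple(sum(m * x for m, x in zip(row, z)) + ci for row, ci in zip(M, c))

def compose(f, g):
    """Return h such that h(z) = f(g(z))."""
    (Mf, cf), (Mg, cg) = f, g
    rows, inner, cols = len(Mf), len(Mg), len(Mg[0])
    M = [[sum(Mf[r][k] * Mg[k][col] for k in range(inner)) for col in range(cols)]
         for r in range(rows)]
    c = [sum(Mf[r][k] * cg[k] for k in range(inner)) + cf[r] for r in range(rows)]
    return (M, c)

# g is (i,j,k -> i,j+k); f is (i,p -> p-1), an illustrative choice.
g = ([[1, 0, 0], [0, 1, 1]], [0, 0])
f = ([[0, 1]], [-1])

# (E.f).g and E.(f o g) read E at the same point.
h = compose(f, g)
```

Here `h` is the single dependence (i,j,k -> j+k-1), so the two nested dependences collapse into one at the innermost level.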

More sophisticated normalization rules may be applied, expressing the interaction of reduce expressions with other subexpressions. However, these are unnecessary in the scope of this chapter.

The validity of these rules, in the context of obtaining a valid specification of the language, relies on the closure properties of the family of unions of $\mathcal{Z}$-polyhedra.

15.2.4 Simplification of Reductions

Parts of this section are adapted from [38], © 2006, Association for Computing Machinery, Inc., included by permission.

We now provide a deeper study of reductions. Reductions, commonly called accumulations, are the application of an associative and commutative operator to a collection of values to produce a collection of results.

Our use of equations was motivated by the simplification of asymptotic complexity of an equation involving reductions. We first present the required steps for the simplification. Then we will provide an intuitive explanation of the algorithm for simplification. For the sake of intuition, we use the standard mathematical notation for accumulations rather than the reduce expression.

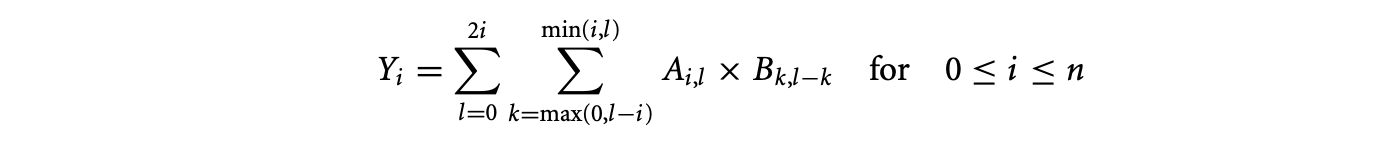

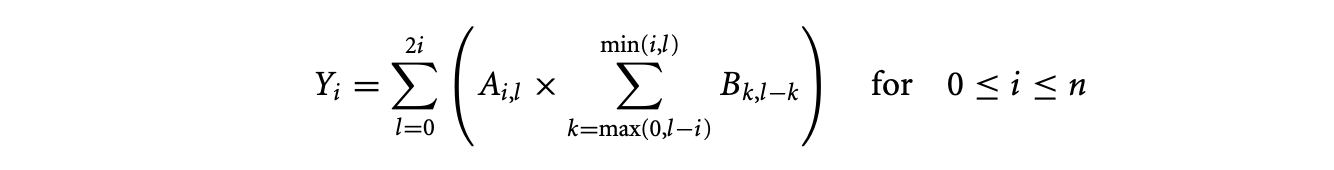

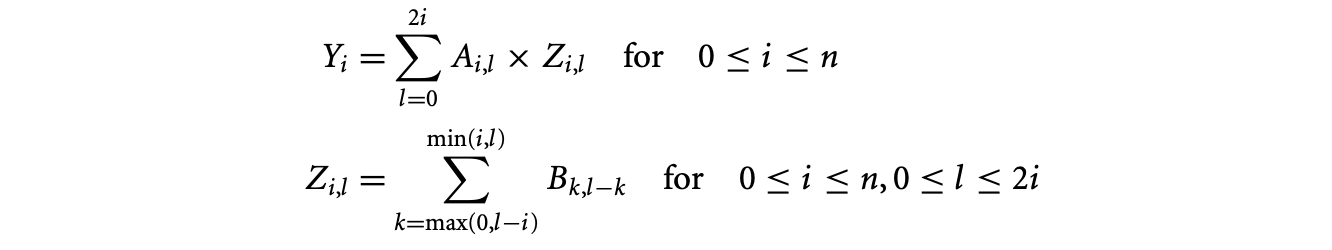

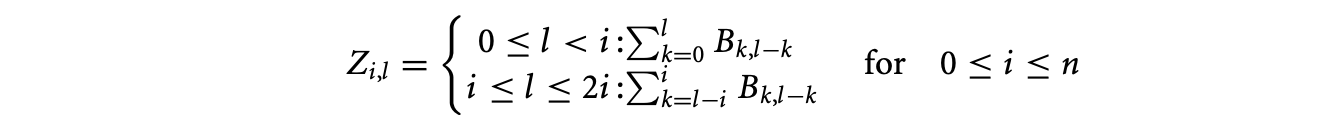

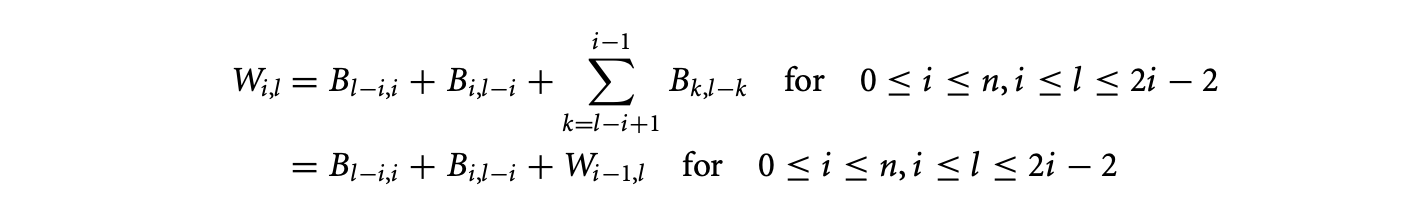

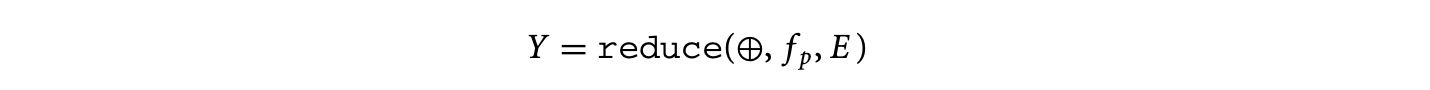

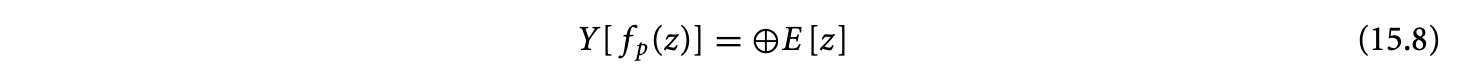

Our initial specification was

The loop nest corresponding to this equation has cubic complexity, which can also be deduced directly from the equational specification. Parameterized by the size parameter, there are three independent indices within the summation. The following steps are involved in the derivation of the equivalent specification.

Footnote 7: With independent, we mean that there are no equalities between indices.

- Introduce the index variable and replace every occurrence of with . This is a change of basis of the three-dimensional space containing the domain of the expression that is reduced.

  The change in the order of summation is legal under our assumption that the reduction operator is associative and commutative.

- Distribute multiplication over the summation since is independent of , the index of the inner summation.

- Introduce variable to hold the result of the inner summation.

  Note that the complexity of evaluating is now quadratic. However, we still have an equational specification that has cubic complexity (for the evaluation of ).

- Separate the summation over to remove min and max operators in the equation for .

- Introduce variables and for each branch of the equation defining .

  Both the equations given above have cubic complexity.

- Reuse. The complexity for the evaluation of can be decreased by identifying that the expression on the rhs is independent of . We may evaluate each result once (for instance, at a boundary) and then pipeline along as follows.

  The initialization takes quadratic time since there are a linear number of results to evaluate and each evaluation takes linear time. Then the pipelining of results over an area requires quadratic time. This decreases the overall complexity of evaluating to quadratic time.

- Scan detection. The simplification of occurs when we identify

  The values are, once again, initialized in quadratic time at a boundary (here, ). The scan takes constant time per iteration over an area and can be performed in quadratic time as well, thereby decreasing the complexity for the evaluation of to quadratic time.

Summarizing, we have the following system of equations:

These equations directly correspond to the optimized loop nest given in Figure 15. We have not optimized these equations or the loop nest any further for the sake of clarity and, more so, because we only want to show an asymptotic decrease in complexity. However, a constant-factor improvement in running time (as well as in the memory requirement) can be obtained by eliminating the variable (or, alternatively, the two variables and ).
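The derivation can be exercised on a concrete instance. The sketch below is not the optimized loop nest of the figure. It assumes the initial specification accumulates A at (i, j+k) times B at (k, j), as the dependence functions in the equational form suggest, and it further assumes the bounds 1 <= j, k <= i for 1 <= i <= n. The names `p`, `C`, and `X` are hypothetical. With p = j + k, the inner sum over k splits into a branch that is independent of i (p <= i + 1, reuse) and a branch that grows by one term at each end as i increases (p > i + 1, scan).

```python
import random

def y_naive(A, B, n):
    """Direct evaluation of Y[i] = sum_{j=1..i} sum_{k=1..i} A[i][j+k] * B[k][j]."""
    Y = [0] * (n + 1)
    for i in range(1, n + 1):
        for j in range(1, i + 1):
            for k in range(1, i + 1):
                Y[i] += A[i][j + k] * B[k][j]
    return Y

def y_simplified(A, B, n):
    """Quadratic evaluation after the change of basis p = j + k."""
    # Reuse: for p <= i + 1, the sum over k = 1..p-1 of B[k][p-k] does not depend on i.
    C = [0] * (n + 2)
    for p in range(2, n + 2):
        C[p] = sum(B[k][p - k] for k in range(1, p))
    X = [[0] * (2 * n + 1) for _ in range(n + 1)]
    Y = [0] * (n + 1)
    for i in range(1, n + 1):
        for p in range(2, 2 * i + 1):
            if p <= i + 1:
                X[i][p] = C[p]
            elif p == 2 * i:
                X[i][p] = B[i][i]          # the range k = p-i..i is the single point k = i
            else:
                # Scan: the range k = p-i..i gains one term at each end when i grows by one.
                X[i][p] = X[i - 1][p] + B[p - i][i] + B[i][p - i]
            Y[i] += A[i][p] * X[i][p]
    return Y

def make_inputs(n, seed=0):
    # 1-based arrays padded with an unused row and column 0.
    rng = random.Random(seed)
    A = [[rng.randint(-9, 9) for _ in range(2 * n + 1)] for _ in range(n + 1)]
    B = [[rng.randint(-9, 9) for _ in range(n + 1)] for _ in range(n + 1)]
    return A, B
```

Both functions fill `Y[1..n]`; the second performs a constant amount of work per (i, p) pair plus a quadratic precomputation of `C`.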

We now provide an intuitive explanation of the algorithm for simplification. Consider the reduction

where is defined over the domain . The accumulation space of the above equation is characterized by . Any two points and that contribute to the same element of the result, , satisfy . To aid intuition, we may also write this reduction as

is the "accumulation," using the operator, of the values of at all points that have the same image . Now, if has a distinct value at all points in its domain, they must all be computed, and no optimization is possible. However, consider the case where the expression exhibits reuse: its value is the same at many points in . Reuse is characterized by , the kernel of a many-to-one affine function, ;

the value of at any two points in is the same if their difference belongs to . We can denote this reuse by , where is a variable with domain . In our language, this would be expressed by the dependence expression . The canonical equation that we analyze is

Its nominal complexity is the cardinality of the domain of . The main idea behind our method is based on analyzing (a) , the domain of the expression inside the reduction, (b) its reuse space, and (c) the accumulation space.

15.2.4.1 Core Algorithm

Consider two adjacent instances of the answer variable, and along , where is a vector in the reuse space of . The set of values that contribute to and overlap. This would enable us to express in terms of . Of course, there would be residual accumulations on values outside the intersection that must be "added" or "subtracted" accordingly. We may repeat this for other values of along . The simplification results from replacing the original accumulation by a recurrence on and residual accumulations. For example, in the simple scan, , the expression inside the summation has reuse along . Taking , we get .

The geometric interpretation of the above reasoning is that we translate by a vector in the reuse space of . Let us call the translated domain . The intersection of and is precisely the domain of values, the accumulation over which can be avoided. Their differences account for the residual accumulations. In the simple scan explained above, the residual domain to be added is , and the domain to be subtracted is empty. The residual accumulations to be added or subtracted are determined only by and .
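As a concrete instance of this reasoning, take the simple scan to be a prefix sum, where the i-th result accumulates the first i inputs (an assumption about the example's exact form). Translating the accumulation domain by one step along i leaves a residual of a single point to add and nothing to subtract. A minimal sketch with hypothetical function names:

```python
def scan_naive(X):
    # Each result re-accumulates its whole domain: quadratic work overall.
    return [sum(X[: i + 1]) for i in range(len(X))]

def scan_recurrence(X):
    # Each result reuses its neighbor and adds the one residual value: linear work.
    Y = []
    for i, x in enumerate(X):
        Y.append(x if i == 0 else Y[i - 1] + x)
    return Y
```

The first result plays the role of the initialization; every later result costs one addition.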

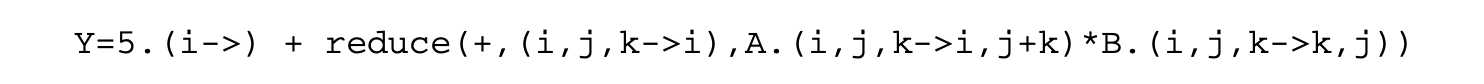

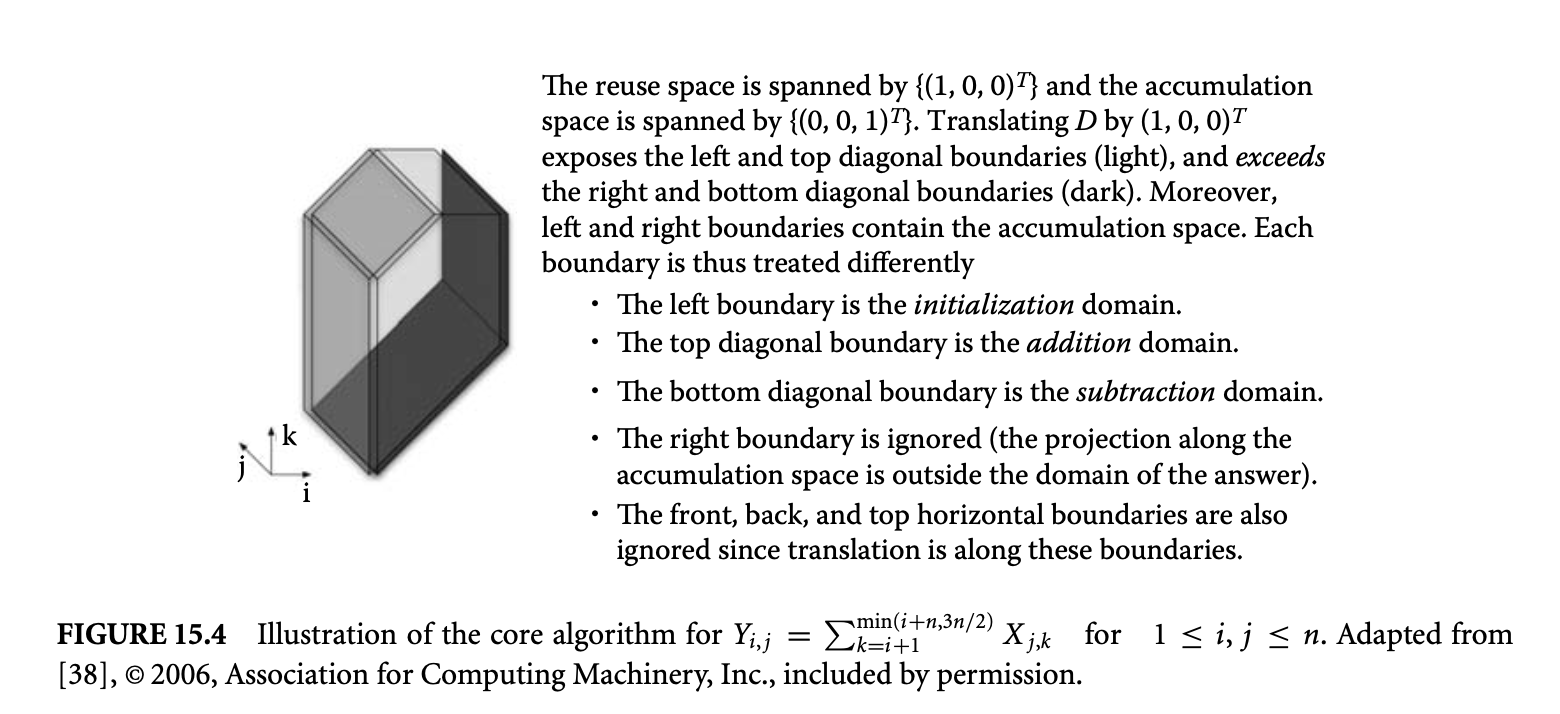

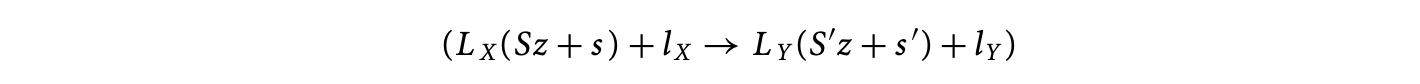

This leads to Algorithm 15.1 (also see Figure 15.4).

Algorithm 15.1 Intuition of the Core Algorithm

Adapted from [38], © 2006, Association for Computing Machinery, Inc., included by permission.

- Choose , a vector in , along which the reuse of is to be exploited. In general, is multidimensional and therefore there may be infinitely many choices.

- Determine the domains , and corresponding to the domain of initialization, and the residual domains to subtract and to add, respectively. The choice of is made such that the cardinalities of these three domains are polynomials whose degree is strictly less than that for the original accumulation. This leads to simplification of the complexity.

Figure 15.4: Illustration of the core algorithm for for . Adapted from [38], © 2006, Association for Computing Machinery, Inc., included by permission.

- For these three domains, , , and , define the three expressions, , , and , consisting of the original expression , restricted to the appropriate subdomain.

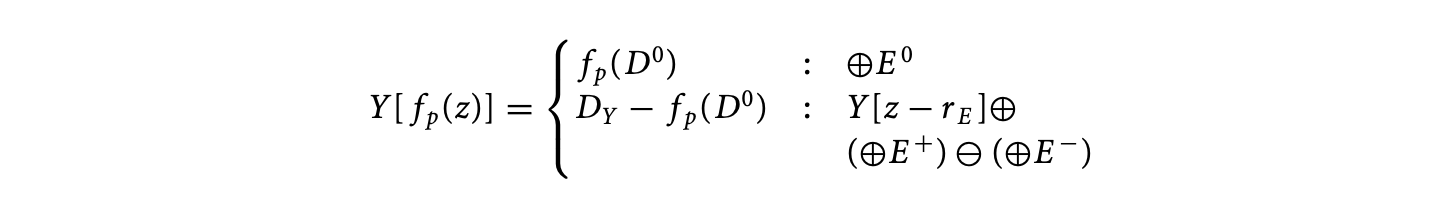

- Replace the original equation by the following recurrence:

- Apply steps 1 to 4 recursively on the residual reductions over , , or if they exhibit further reuse.

Note that Algorithm 15.1 assumes that the reduction operation admits an inverse; that is, "subtraction" is defined. If this is not the case, we need to impose constraints on the direction of reuse to exploit: essentially, we require that the domain is empty. This leads to a feasible space of exploitable reuse.
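The role of the inverse can be seen on a sliding-window accumulation, an illustrative example that is not taken from the text. Shifting the window by one position leaves one value to add and one to subtract, so the recurrence needs subtraction. For an operator without an inverse, such as max, only reuse directions with an empty domain to subtract are exploitable, as in a prefix maximum. Function names are hypothetical.

```python
def window_sums_naive(X, w):
    return [sum(X[i:i + w]) for i in range(len(X) - w + 1)]

def window_sums_incremental(X, w):
    m = len(X) - w + 1
    if m <= 0:
        return []
    Y = [sum(X[:w])]                                  # initialization domain
    for i in range(1, m):
        # Residual to add: X[i + w - 1]; residual to subtract: X[i - 1].
        Y.append(Y[i - 1] + X[i + w - 1] - X[i - 1])
    return Y

def prefix_max(X):
    # max has no inverse, but here nothing ever needs to be subtracted.
    Y = []
    for i, x in enumerate(X):
        Y.append(x if i == 0 else max(Y[i - 1], x))
    return Y
```

A sliding-window maximum, by contrast, cannot be rewritten this way, because leaving the window would require "subtracting" a value from a maximum.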

15.2.4.2 Multidimensional Reuse

When the reuse space as well as the accumulation space are multidimensional, there are some interesting interactions. Consider the equation for . It has two-dimensional reuse (in the plane), and the accumulation is also two-dimensional (in the plane). Note that the two subspaces intersect, and this means that in the direction, not only do all points have identical values, but they also all contribute to the same answer. From the bounds on the summation we see that there are exactly such values, so the inner summation is just , because multiplication is a higher-order operator for repeated addition of identical values (similar situations arise with other operators, e.g., power for multiplication, identity for the idempotent operator max, etc.). We have thus optimized the computation to . However, our original equation had two dimensions of reuse, and we may wonder whether further optimization is possible. In the new equation , the body is the product of two subexpressions, and . They both have one dimension of reuse, in the and directions, respectively, but their product does not. No further optimization is possible for this equation.

However, if we had first exploited reuse along , we would have obtained the simplified equation , initialized with . The residual reduction here is itself a scan, and we may recurse the algorithm to obtain and initialized with . Thus, our equation can be computed in linear time. This shows how the choice of reuse vectors to exploit, and their order, affects the final simplification.
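The effect of the order in which reuse is exploited can be tried on a hypothetical instance chosen to match the description above: a doubly nested sum, over j up to i and k up to j, of an input that depends on k only. This specific equation is an assumption. Collapsing the shared direction first replaces the inner sum by a multiplication and leaves a quadratic computation. Exploiting reuse along i first gives a recurrence whose residual is itself a scan, and the whole computation becomes linear.

```python
def y_cubic(X):
    n = len(X)
    return [sum(X[k] for j in range(i + 1) for k in range(j + 1)) for i in range(n)]

def y_quadratic(X):
    # X[k] is accumulated once for every j with k <= j <= i, that is, (i - k + 1) times.
    n = len(X)
    return [sum((i - k + 1) * X[k] for k in range(i + 1)) for i in range(n)]

def y_linear(X):
    Y, Z = [], []
    for i, x in enumerate(X):
        Z.append(x if i == 0 else Z[i - 1] + x)        # residual reduction, itself a scan
        Y.append(Z[i] if i == 0 else Y[i - 1] + Z[i])  # recurrence along i
    return Y
```

All three functions use 0-based indices, so the first result corresponds to the initialization at the boundary.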

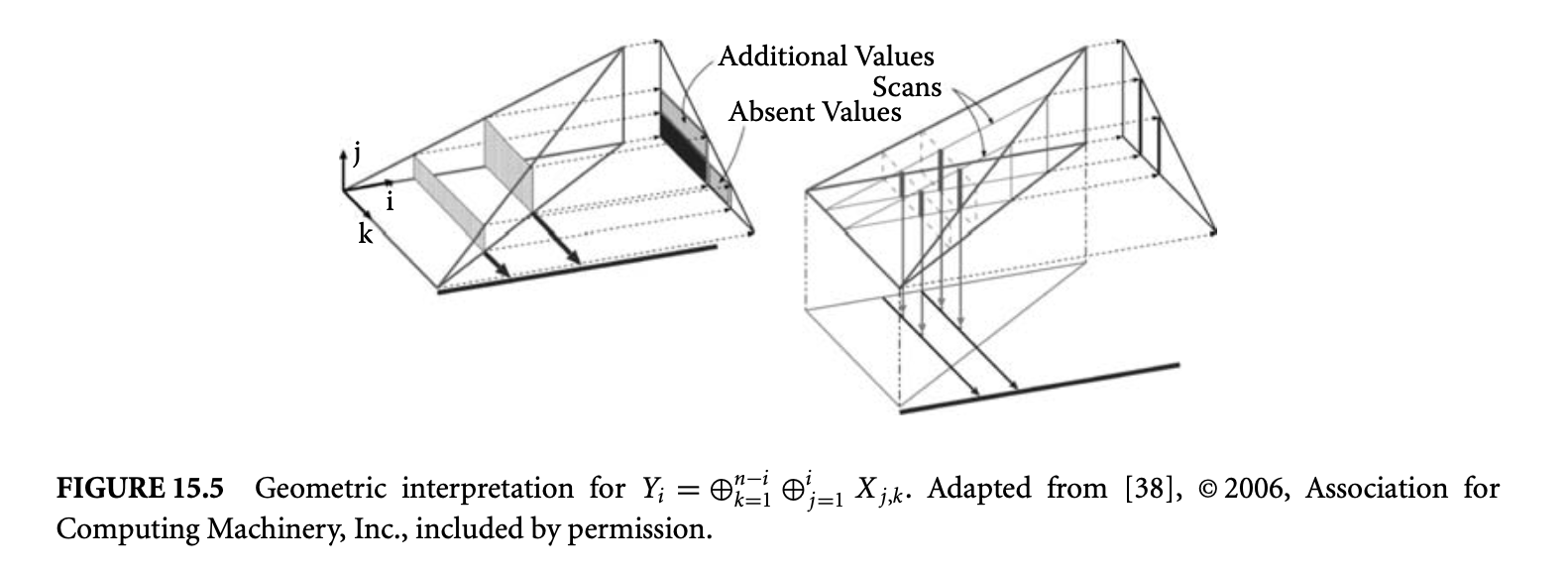

15.2.4.3 Decomposition of Accumulation

Consider the equation for . The one-dimensional reuse space is along , and is the two-dimensional accumulation space. The set of points that contribute to the th result lie in an rectangle of the two-dimensional input array . Comparing successive rectangles, we see that as the width decreases from one to the other, the height increases (Figure 15.5). If the operator does not have an inverse, it seems that we may not be able to simplify this equation. This is not true: we can see that for each we have an independent scan. The inner reduction is just a family of scans, which can be done in quadratic time with initialized with . The outer reduction just accumulates columns of , which is also quadratic.
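A sketch of this decomposition, under the assumption that the i-th result accumulates a two-dimensional input over rows i to n and columns 1 to i, so that the rectangle gets shorter in one direction and longer in the other as i grows. It uses max as the operator to stress that no inverse is needed. The equation's exact bounds and the function names are hypothetical.

```python
import random

def rect_max_naive(X):
    n = len(X)
    return [max(X[j][k] for j in range(i, n) for k in range(i + 1)) for i in range(n)]

def rect_max_decomposed(X):
    n = len(X)
    # Inner reduction: for each column k, an independent scan over rows,
    # Z[i][k] = max of X[j][k] for j >= i.
    Z = [[None] * n for _ in range(n)]
    for i in range(n - 1, -1, -1):
        for k in range(n):
            Z[i][k] = X[i][k] if i == n - 1 else max(Z[i + 1][k], X[i][k])
    # Outer reduction: combine the partial answers of the first i + 1 columns.
    return [max(Z[i][k] for k in range(i + 1)) for i in range(n)]

def random_matrix(n, seed=0):
    rng = random.Random(seed)
    return [[rng.randint(-50, 50) for _ in range(n)] for _ in range(n)]
```

Both the family of scans and the outer accumulation touch a quadratic number of points, against a cubic number for the naive version.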

What we did in this example was to decompose the original reduction that was along the space into two reductions, the inner along the space yielding partial answers along the plane and the outer that combined these partial answers along the space. Although the default choice of the decomposition (the innermost accumulation direction) of the space worked for this example, in general this is not the case. The optimal solution may require a nonobvious decomposition, for instance, along some diagonal. We encourage the reader to simplify the following equation:

Solution: The inner reduction would map all points for which , for a given constant , to the same partial answer.

15.2.4.4 Distributivity and Accumulation Decomposition

Returning to the simplification of Equation 15.7, we see that the methods presented so far do not apply, since the body of the summation, , has no reuse. The expression has a distinct value at each point in the three-dimensional space spanned by , , and . However, the expression is composed of two subexpressions, which individually have reuse and are combined with the multiplication operator that distributes over the reduction operator, addition.

We may be able to distribute a subexpression outside a reduction if it has a constant value at all the points that map to the same answer. This was ensured by a change in basis of the three-dimensional space to , , and , followed by a decomposition to summations over and then . Values of were constant for different iterations of the accumulation over . After distribution, the body of the inner summation exhibited reuse that was exploited for the simplification of complexity.

15.2.5 Scheduling

Adapted from [36] © 2007 IEEE, included by permission.

Scheduling is assigning an execution time to each computation so that precedence constraints are satisfied. It is one of the most important and widely studied problems. We present the scheduling analysis for programs in the $\mathcal{Z}$-polyhedral model. The resultant schedule can be subsequently used to construct a space-time transformation leading to the generation of sequential or parallel code. The application of this schedule is made possible as a result of closure of the family of $\mathcal{Z}$-polyhedral domains under image by the constructed transformation. We showed the advantages of scheduling programs in the $\mathcal{Z}$-polyhedral model in Section 15.2.2. The general problem of scheduling programs with reductions is beyond the scope of this chapter. We will restrict our analysis to $\mathcal{Z}$-polyhedral programs without reductions.

The steps involved in the scheduling analysis are (a) deriving precedence (causality) constraints for programs written in the $\mathcal{Z}$-polyhedral model and (b) formulation of an integer linear program to obtain the schedule.

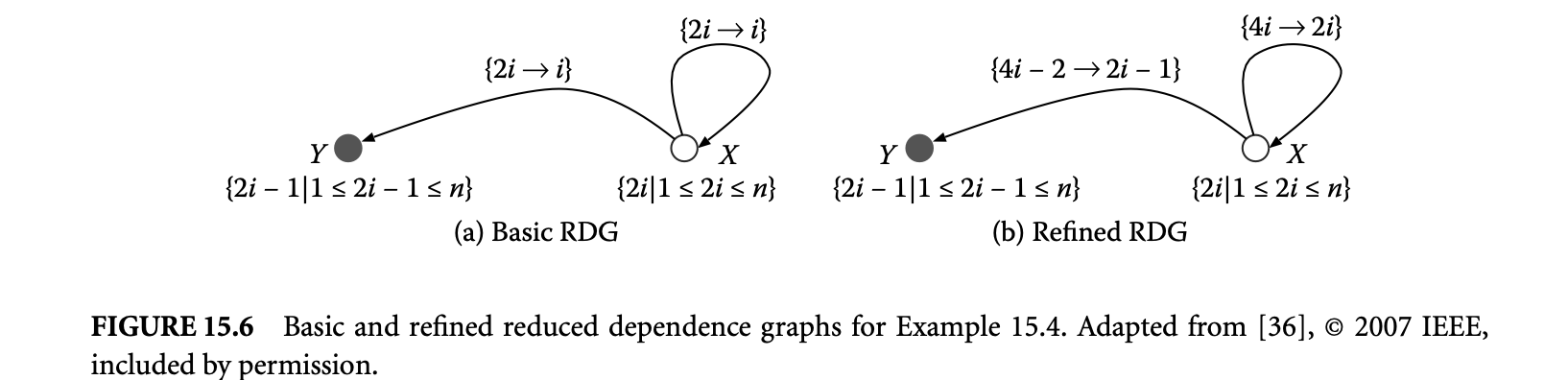

The precedence constraints between variables are derived from the reduced dependence graph (RDG). We will now provide some refinements of the RDG.

15.2.5.1 Basic and Refined RDG

Equations in the $\mathcal{Z}$-polyhedral model can be defined over an infinite iteration domain. For any dependence analysis on an infinite graph, we need a compact representation. The RDG, a directed multigraph, precisely describes the dependences between iterations of variables. It is based on the normalized form of a specification and defined as follows:

- For every variable in the normalized specification, there is a vertex in the RDG labeled by the variable name and annotated by its domain. We will refer to vertices and variables interchangeably.

- For every dependence of the variable on , there is an edge from to , annotated by the corresponding dependence function. We will refer to edges and dependences interchangeably.

At a finer granularity, every branch of an equation in a normalized specification dictates the dependences between computations. A precise analysis requires that dependences be expressed separately for every branch. Again, for reasons of precision, we may express dependences of a variable separately for every $\mathcal{Z}$-polyhedron in the $\mathcal{Z}$-polyhedral domain of the corresponding branch of its equation. To enable these, we replace a variable by a set of new variables as elaborated below. Remember, our equations are of the form

Let be written as a disjoint union of $\mathcal{Z}$-polyhedra given by . The variable in the domain is replaced by a new variable, for instance, . Similarly, let be replaced by new variables given as . The dependence of in on is replaced by dependences from all on all . An edge from to may be omitted if there are no iterations in that map to (mathematically, if the preimage of by the dependence function does not intersect with ). A naive construction following these rules results in the basic reduced dependence graph.
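The construction and the pruning rule can be sketched on a small hypothetical specification. It assumes finite, explicitly enumerated pieces (so the preimage test becomes a membership test), a one-dimensional variable split into the pieces `X_init`, `X_even`, and `X_odd`, and two dependence functions; none of these come from Equation 15.4.

```python
def build_basic_rdg(pieces, dependences):
    """pieces: {piece name: set of points}.
    dependences: list of (consumer piece, dependence function, label).
    Returns edges (consumer, producer, label), directed from consumer to producer."""
    edges = []
    for consumer, f, label in dependences:
        for producer, domain in pieces.items():
            # Keep the edge only if some consumer iteration maps into this producer piece.
            if any(f(z) in domain for z in pieces[consumer]):
                edges.append((consumer, producer, label))
    return edges

pieces = {
    "X_init": {0},
    "X_even": {2, 4, 6},
    "X_odd": {1, 3, 5, 7},
}
dependences = [
    ("X_odd", lambda i: i - 1, "i->i-1"),
    ("X_even", lambda i: i - 2, "i->i-2"),
]
edges = build_basic_rdg(pieces, dependences)
```

Of the six candidate edges, the two that target `X_odd` are dropped because no iteration of either consumer maps to an odd point.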

Figure 15.6a gives the basic RDG for Equation 15.4, which is repeated here for convenience.

The domains of and the constant are and , respectively. Next, we will study a refinement on this RDG.

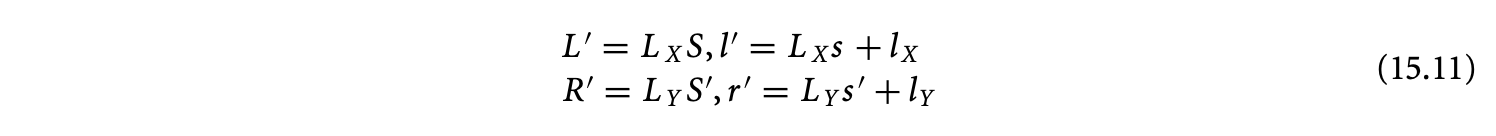

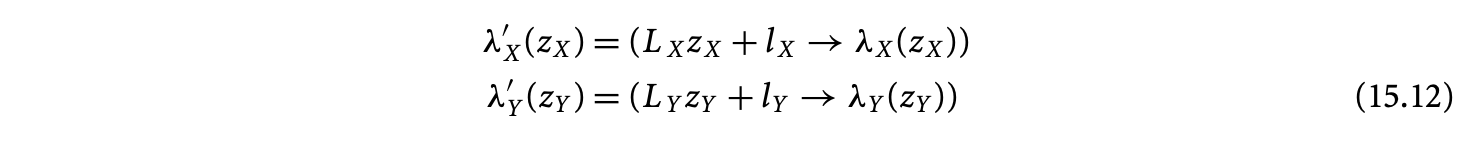

In the RDG for the generic equation given in Equation 15.10, let be a variable derived from and defined on , and let be a variable derived from defined on , where and are given as follows:

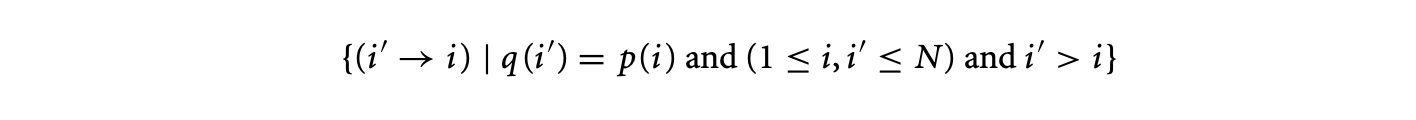

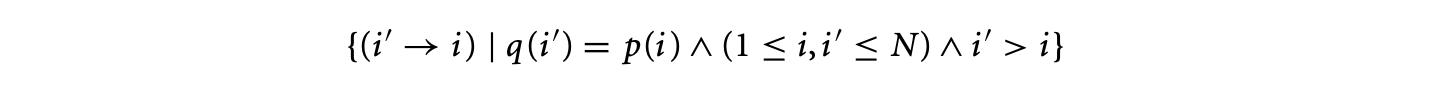

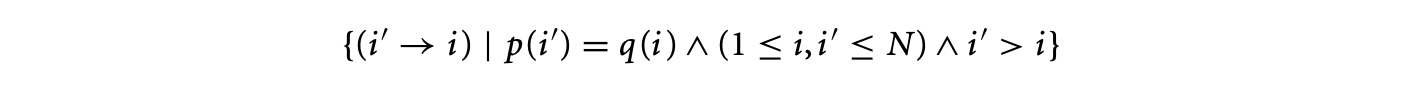

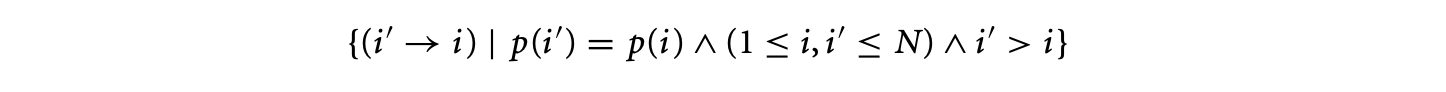

A dependence of the form is directed from to . at cannot be evaluated before at . The affine lattice may contain points that do not lie in the affine lattice . Similarly, the affine lattice may contain points that do not lie in the affine lattice . As a result, the dependence may be specified on a finer lattice than necessary and may safely be replaced by a dependence of the form , where

where and are matrices and and are integer vectors. The refined RDG is a refinement of the basic RDG where every dependence has been replaced by a dependence satisfying Equation 15.11. Figure 15.6b gives the refined RDG for Equation 15.4.

15.2.5.2 Causality Constraints

Dependences between the different iterations of variables impose an ordering on their evaluation. A valid schedule of the evaluation of these iterations is the assignment of an execution time to each computation so that precedence (causality) constraints are satisfied.

Let and be two variables in the refined RDG defined on and , respectively. We seek to find schedules on and of the following form:

where and are affine functions on and , respectively. Our motivation for such schedules is that all vectors and matrices are composed of integer scalars. If we seek schedules of the form , where is an affine function and is an iteration in the domain of a variable, then we may potentially assign execution times to "holes," or computations that do not exist.

We will now formulate causality constraints using the refined RDG. Consider dependences from to . All such dependences can be written as

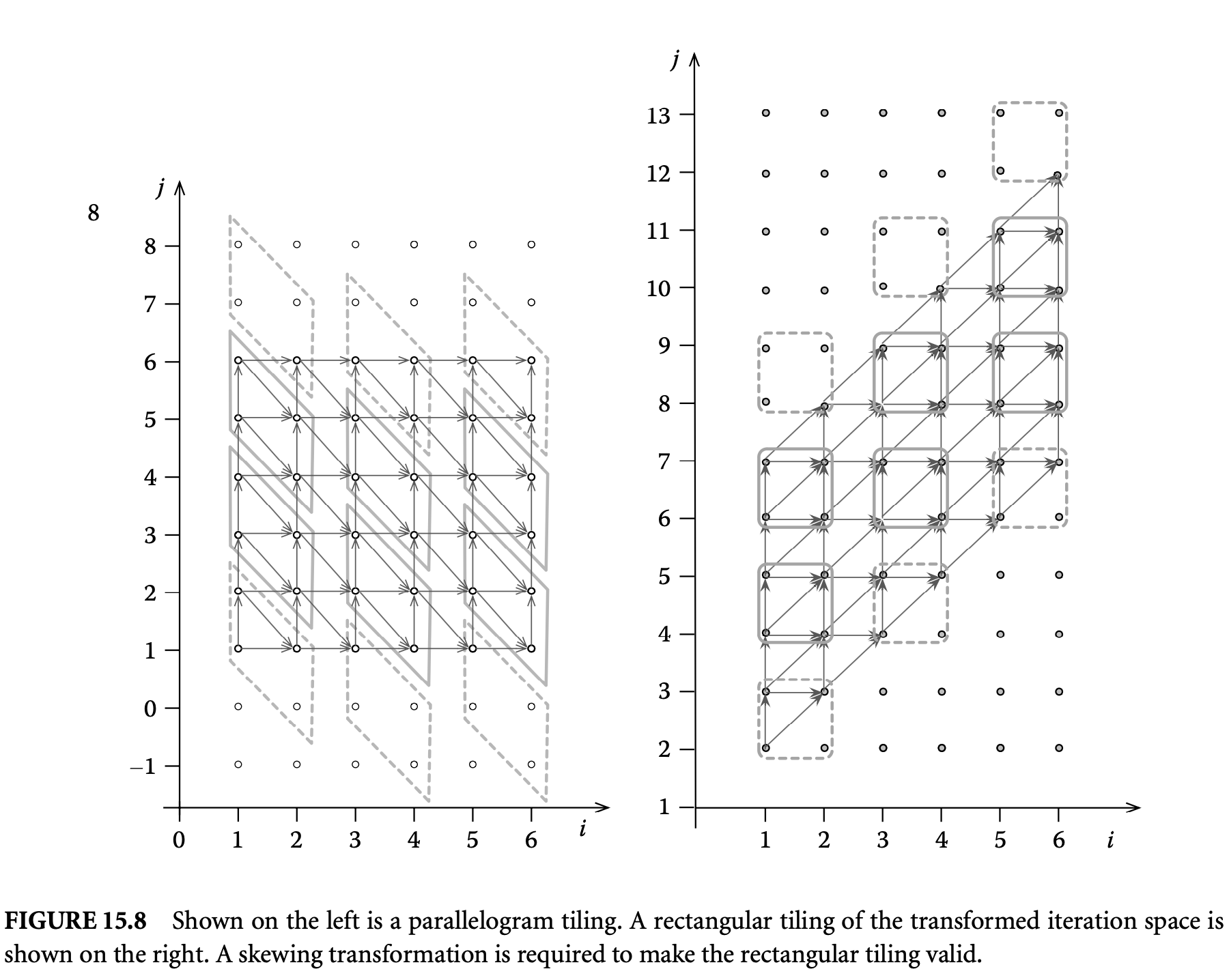

where and are matrices and and are vectors. The execution time for at should precede the execution time for at . With the nature of the schedules presented in Equation 15.12, our causality constraint becomes

with the assumption that op is atomic and takes a single time step to evaluate.

From these constraints, we may derive an integer linear program to obtain schedules of the form , where is the lattice corresponding to the $\mathcal{Z}$-polyhedron and is the affine function (composed of integer scalars) on the coordinates of this lattice. An important feature of this formulation is that the resultant schedule can then be used to construct a space-time transformation.
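The causality constraint can be checked by brute force on a bounded instance. The sketch below does not solve the integer linear program; it only validates candidate schedules. It assumes a hypothetical two-dimensional recurrence in which each point depends on its left and upper neighbors, schedules given as plain affine functions of the iteration point, and a unit evaluation time for the operator.

```python
def is_causal(domains, dependences, schedules):
    """domains: {variable: set of points}.
    dependences: list of (consumer, producer, f), where the consumer at z reads the producer at f(z).
    schedules: {variable: function from point to integer time}."""
    for consumer, producer, f in dependences:
        for z in domains[consumer]:
            src = f(z)
            if src not in domains[producer]:
                continue  # the value comes from outside the computed domain (an input)
            # The producer must finish one time step before the consumer executes.
            if schedules[consumer](z) < schedules[producer](src) + 1:
                return False
    return True

n = 4
square = {(i, j) for i in range(n) for j in range(n)}
domains = {"X": square}
dependences = [
    ("X", "X", lambda z: (z[0] - 1, z[1])),
    ("X", "X", lambda z: (z[0], z[1] - 1)),
]
wavefront = {"X": lambda z: z[0] + z[1]}   # respects both dependences
rows_only = {"X": lambda z: z[0]}          # ignores the dependence along j
```

The wavefront schedule is valid, while the row-only schedule assigns the same time to a point and to the neighbor it reads along j.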

15.2.6 Backend

After optimization of the equational specification and obtaining a schedule, the following steps are performed to generate (parallel) imperative code.

Analogous to the schedule that assigns a date to every operation, a second key aspect of the parallelization is to assign a processor to each operation. This is done by means of a processor allocation function. As with schedules, we confine ourselves to affine lattice functions. Since there are no causality constraints for choosing an allocation function, there is considerable freedom in choosing it. However, in the search for processor allocation functions, we need to ensure that two iteration points that are scheduled at the same time are not mapped to the same processing element.
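The condition on allocation functions amounts to requiring that the pair (time, processor) identifies an iteration point uniquely. A minimal brute-force check over a bounded domain, with a hypothetical wavefront schedule and hypothetical candidate allocations:

```python
def has_conflict(points, schedule, allocation):
    # Two distinct points conflict if they share both execution time and processor.
    seen = set()
    for z in points:
        key = (schedule(z), allocation(z))
        if key in seen:
            return True
        seen.add(key)
    return False

n = 4
points = [(i, j) for i in range(n) for j in range(n)]

def schedule(z):
    return z[0] + z[1]        # wavefront schedule

def by_row(z):
    return z[0]               # one processor per row

def by_diagonal(z):
    return z[0] + z[1]        # one processor per wavefront
```

Allocating by row is conflict-free with this schedule, since time and row together determine the column; allocating by diagonal maps a whole wavefront to a single processor at a single time.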

The final key aspect in the static analysis of our equations is the allocation of operations to memory locations. As with the schedule and processor allocation function, the memory allocation is also an affine lattice function. The memory allocation function is, in general, a many-to-one mapping with most values overwritten as the computation proceeds. The validity condition for memory allocation functions is that no value is overwritten before all the computations that depend on it are themselves executed.
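The validity condition can also be tested by enumeration. The sketch below assumes a hypothetical one-dimensional recurrence in which each point reads its two predecessors, a sequential schedule, and modular memory mappings. It further assumes that within a single operation all reads precede the write, so an operation may overwrite a cell that it reads itself.

```python
def memory_is_valid(points, reads, schedule, memory):
    """reads(c) yields the points whose values are read by the computation at c."""
    pts = set(points)
    # Time of the last computation that reads each produced value.
    last_use = {}
    for c in pts:
        for p in reads(c):
            if p in pts:
                last_use[p] = max(last_use.get(p, schedule(p)), schedule(c))
    # A value must not be overwritten strictly between its production and its last use.
    for p, use_time in last_use.items():
        for q in pts:
            if q != p and memory(q) == memory(p) and schedule(p) < schedule(q) < use_time:
                return False
    return True

points = range(8)

def reads(i):
    return (i - 1, i - 2)

def schedule(i):
    return i

def one_cell(i):
    return 0

def two_cells(i):
    return i % 2
```

A single cell is insufficient because each value is still needed two steps later, whereas two cells used alternately suffice.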

Once we have the three sets of functions, namely, schedule, processor allocation, and memory allocation, we are left with the problem of code generation. Given the above three functions, how do we produce parallel code that "implements" these choices? Code generation may produce either sequential or parallel code for programmable processors, or even descriptions of application-specific or nonprogrammable hardware (in appropriate hardware description language) that implements the computation specified by the equation.

Current techniques in code generation produce extremely efficient implementations comparable to hand-optimized imperative programs. With this knowledge, we return to our motivation for the use of equations to specify computations. An imperative loop nest that corresponds to an equation contains more information than required to specify the computation. There is an order (corresponding to the schedule) in the evaluation of values of a variable at different iteration points, namely, the lexicographic order of the loop indices. A loop nest also specifies the order of evaluation of the partial results of accumulations. A memory mapping has been chosen to associate values to memory locations. Finally, in the case of parallel code, a loop nest also specifies a processor allocation. Any analysis or transformation of loop nests that is equivalent to analysis or transformations on equations has to deconstruct these attributes and, thus, becomes unnecessarily complex.

15.2.7 Bibliographic Notes

Parts of this section are adapted from [36] © 2007 IEEE and [37, 38], © 2007, 2006 Association for Computing Machinery, Inc., included by permission.

Our presentation of the equational language and the various analyses and transformations is based on the ALPHA language [59, 69] and the MMALPHA framework for manipulating ALPHA programs, which relies on a library for manipulating polyhedra [107].

Although the presentation in this section has focused on equational specifications, the impact of the presented work is equally directed toward loop optimizations. In fact, many advances in the development of the polyhedral model were motivated by the loop parallelization and hardware synthesis communities.

To overcome the limitations of the polyhedral model in its requirement of dense iteration spaces, Teich and Thiele proposed LBLs [104]. $\mathcal{Z}$-polyhedra were originally proposed by Ancourt [6]. Le Verge [60] argued for the extension of the polyhedral model to $\mathcal{Z}$-polyhedral domains. Le Verge also developed normalization rules for programs with reductions [59].

The first work that proposed the extension to a language based on unions of $\mathcal{Z}$-polyhedra was by Quinton and Van Dongen [81]. However, they did not have a unique canonic representation. Also, they could not establish the equivalence between identical $\mathcal{Z}$-polyhedra nor provide the difference of two $\mathcal{Z}$-polyhedra or their image under affine functions. Closure of unions of $\mathcal{Z}$-polyhedra under all the required domain operations was proved in [37] as a result of a novel representation for $\mathcal{Z}$-polyhedra and the associated family of dependences. One of the consequences of their results on closure was the equivalence of the family of $\mathcal{Z}$-polyhedral domains and unions of LBLs.

Liu et al. [67] described how incrementalization can be used to optimize polyhedral loop computations involving reductions, possibly improving asymptotic complexity. However, they did not have a cost model and, therefore, could not claim optimality. They exploited reuse only along the indices of the accumulation loops and would not be able to simplify an equation like . Another limitation was the requirement of an inverse operator. Also, they did not consider reduction decompositions and algebraic transformations, and they did not handle the case when there is reuse of values that contribute to the same answer. These limitations were resolved in [38], which presented a precise characterization of the complexity of equations in the polyhedral model and an algorithm for the automatic and optimal application of program simplifications.

The scheduling problem on recurrence equations with uniform (constant-sized) dependences was originally presented by Karp et al. [52]. A similar problem was posed by Lamport [56] for programs with uniform dependences. Shang and Fortes [97] and Lisper [66] presented optimal linear schedules for uniform dependence algorithms. Rao [87] first presented affine-by-variable schedules for uniform dependences (Darte et al. [21] showed that these results could have been interpreted from [52]). The problem of scheduling programs with affine dependences was first solved by Rajopadhye et al. [83] and independently by Quinton and Van Dongen [82]. These results were generalized to variable-dependent schedules by Mauras et al. [70]. Feautrier [31] and Darte and Robert [23] independently presented the optimal solution to the affine scheduling problem (by variable). Feautrier also provided the extension to multidimensional time [32]. The extension of these techniques to programs in the $\mathcal{Z}$-polyhedral model was presented in [36]. Their problem formulation searched for schedules that could directly be used to perform appropriate program transformations. The problem of scheduling reductions was initially solved by Redon and Feautrier [90]. They assumed a Concurrent Read, Concurrent Write Parallel Random Access Machine (CRCW PRAM) such that each reduction took constant time. The problem of scheduling reductions on a Concurrent Read, Exclusive Write (CREW) PRAM was presented in [39]. The scheduling problem was studied along with the objective of minimizing communication by Lim et al. [63]. The problem of memory optimization, too, has been studied extensively [22, 26, 57, 64, 79, 105].

The generation of efficient imperative code for programs in the polyhedral model was presented by Quilleré et al. [80] and later extended by Bastoul [9]. Algorithms to generate code, both sequential and parallel, after applying nonunimodular transformations to nested loop programs have been studied extensively [33, 62, 85, 111]. However, these results were all restricted to single, perfectly nested loop nests, with the same transformation applied to all the statements in the loop body. The code generation problem thus reduced to scanning the image, by a nonunimodular function, of a single polyhedron. The general problem of generating loop nests for a union of $\mathcal{Z}$-polyhedra was solved by Bastoul in [11].

Lenders and Rajopadhye [58] proposed a technique for designing multirate VLSI arrays, which are regular arrays of processing elements, but where different registers are clocked at different rates. Their formulation was based on equations defined over $\mathcal{Z}$-polyhedral domains.

Feautrier [30] showed that an important class of conventional imperative loop programs called affine control loops (ACLs) can be transformed to programs in the polyhedral model. Pugh [78] extended Feautrier's results. The detection of scans in imperative loop programs was presented by Redon and Feautrier in [89]. Bastoul et al. [10] showed that significant parts of the SpecFP and PerfectClub benchmarks are ACLs.

15.3 Iteration Space Tiling

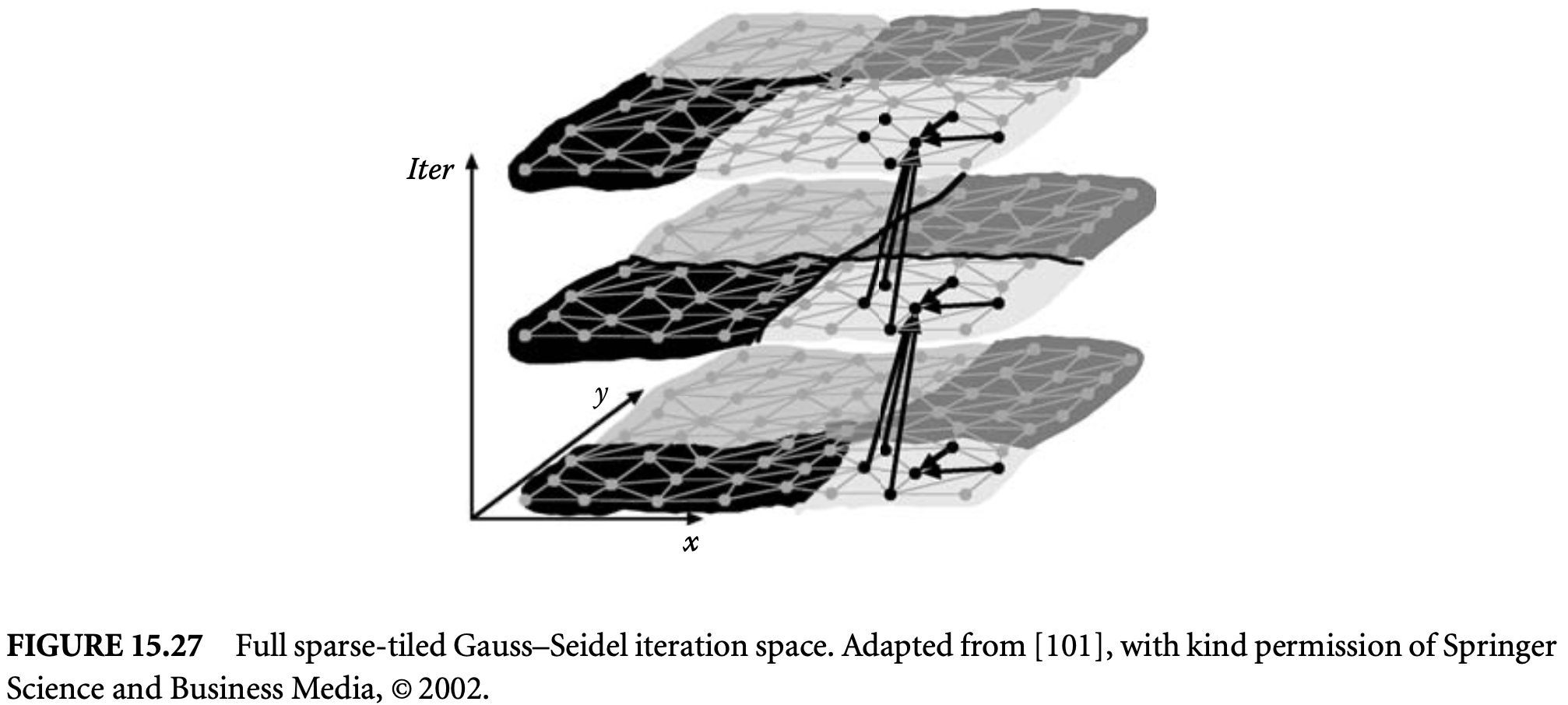

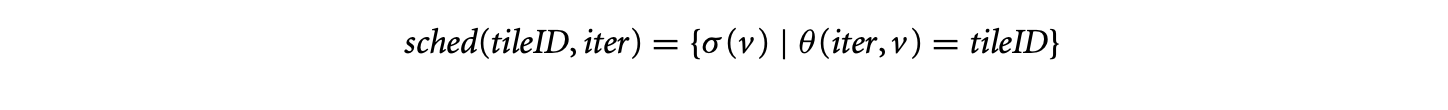

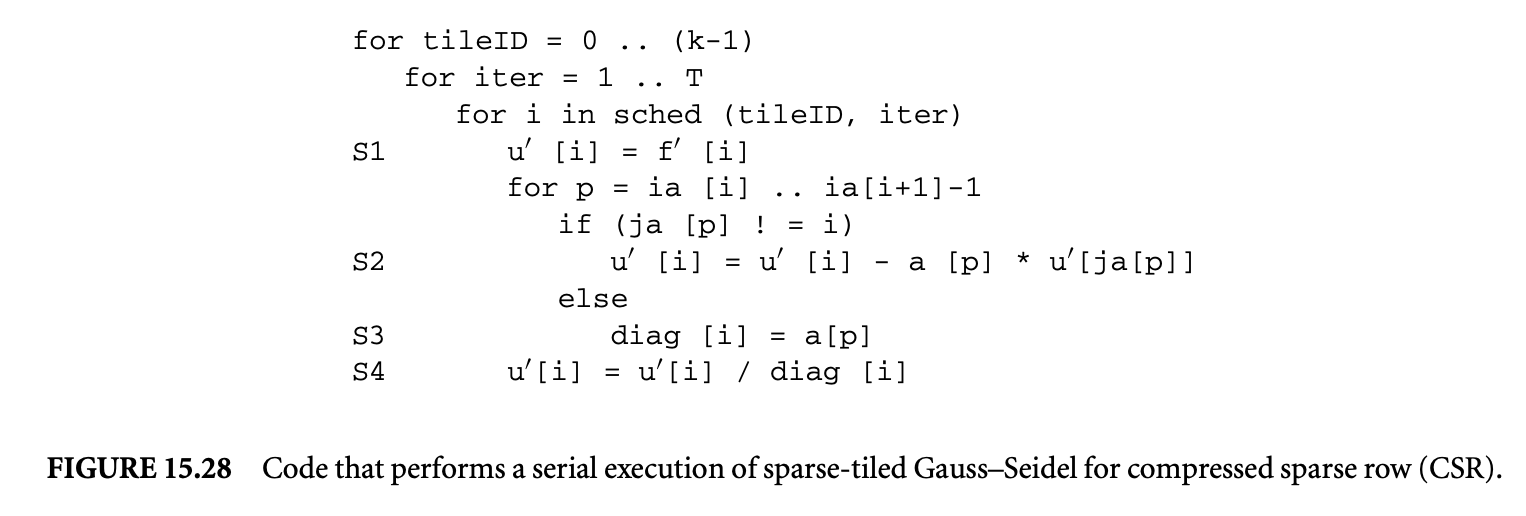

This section describes an important class of reordering transformations called tiling. Tiling is crucial to exploit locality on a single processor, as well as for adapting the granularity of a parallel program. We first describe tiling for dense iteration spaces and data sets and then consider irregular iteration spaces and sparse data sets. Next, we briefly summarize the steps involved in tiling and conclude with bibliographic notes.

15.3.1 Tiling for Dense Iteration Spaces

Tiling is a loop transformation used for adjusting the granularity of the computation so that its characteristics match those of the execution environment. Intuitively, tiling partitions the iterations of a loop into groups called tiles. The tile sizes determine the granularity.
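To make the idea of partitioning iterations into tiles concrete, here is a minimal sketch for a 2-deep loop nest with rectangular tiles. The function names and the choice of a rectangular iteration space starting at 0 are illustrative assumptions. The outer two loops enumerate tile origins, and the inner two loops enumerate the points of one tile.

```python
def original_order(n_i, n_j):
    """Iterations of a 2-deep loop nest in lexicographic order."""
    return [(i, j) for i in range(n_i) for j in range(n_j)]


def tiled_order(n_i, n_j, s_i, s_j):
    """The same iterations, visited tile by tile with s_i x s_j tiles."""
    order = []
    for ti in range(0, n_i, s_i):                      # loops over tile origins
        for tj in range(0, n_j, s_j):
            for i in range(ti, min(ti + s_i, n_i)):    # loops within one tile
                for j in range(tj, min(tj + s_j, n_j)):
                    order.append((i, j))
    return order


small = tiled_order(2, 4, 2, 2)
```

The `min` bounds handle partial tiles at the boundary of the iteration space. The tiled order visits exactly the same set of iterations as the original loop nest; only their order changes, and the tile sizes `s_i` and `s_j` control the granularity.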

In this section, we will study three aspects related to tiling. First, we will introduce tiling as a loop transformation and derive conditions under which it can be applied. Second, we present a constrained optimization approach for formulating and finding the optimal tile sizes. We then discuss techniques for generating tiled code.

15.3.1.1 Tiling as a Loop Transformation

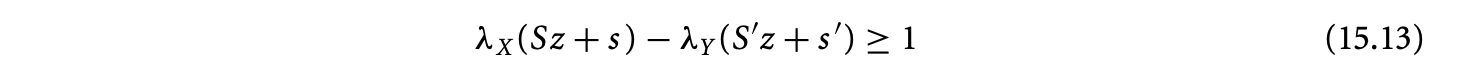

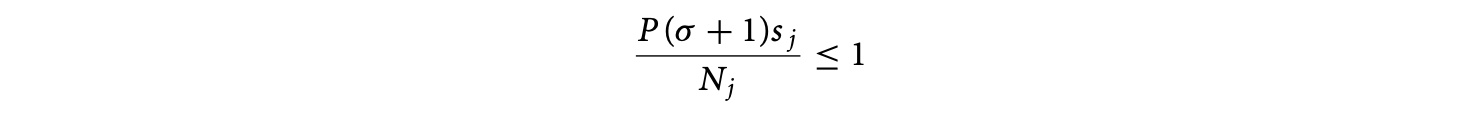

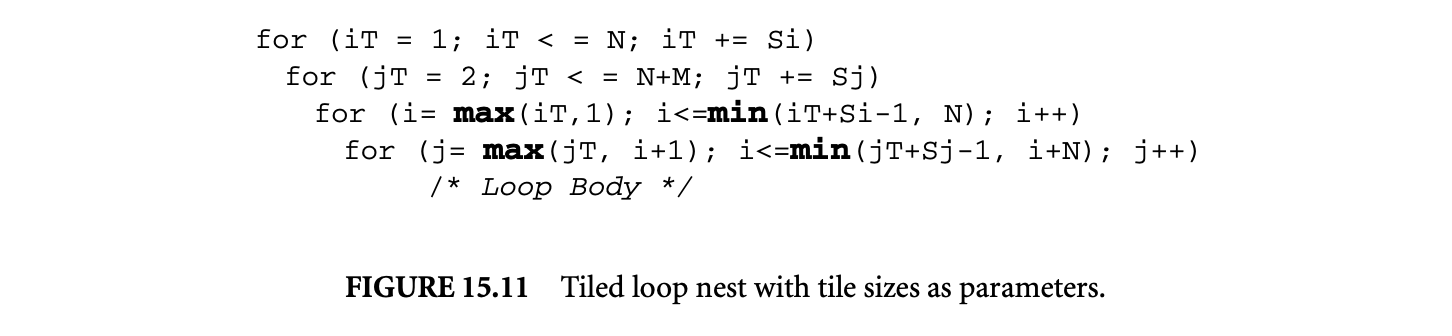

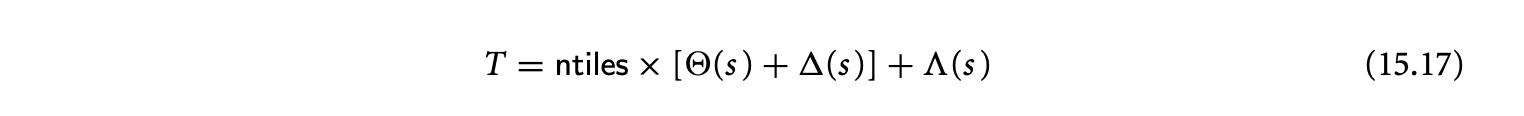

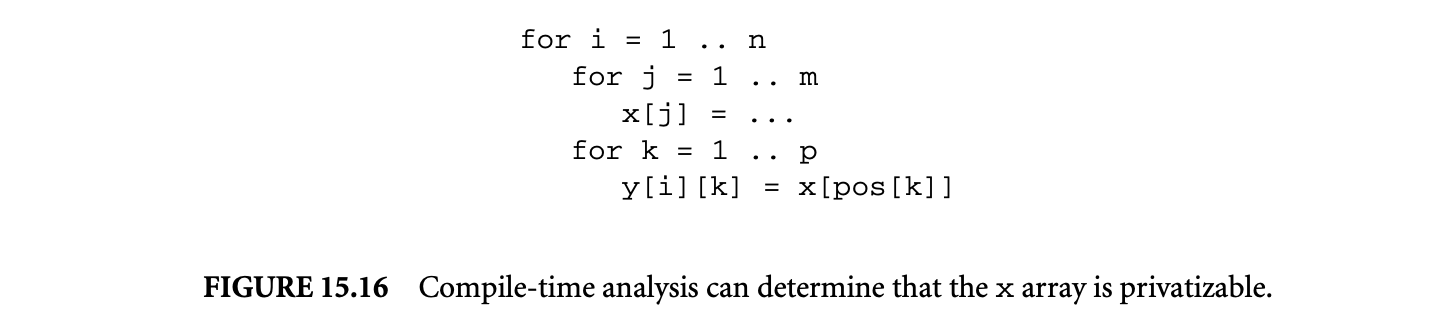

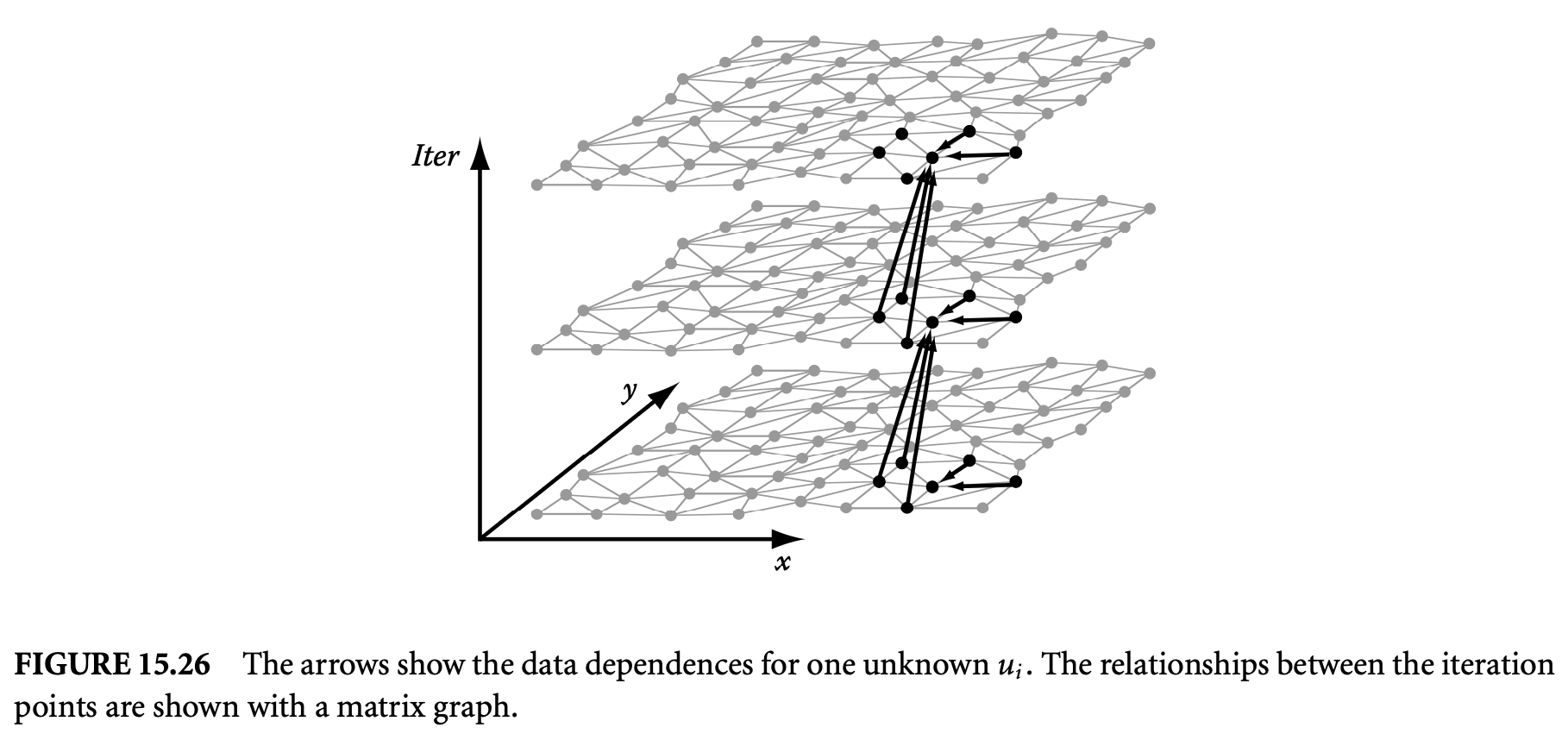

Stencil computations occur frequently in many numerical solvers, and we use them to illustrate the concepts and techniques related to tiling. Consider the typical Gauss-Seidel style stencil computation shown in Figure 15.7 as a running example. The loop specifies a particular order in which the values are computed. An iteration reordering transformation specifies a new order for computation. Obviously not every reordering of the iterations is legal, that is, semantics preserving. The notion of semantics preserving can be formalized using the concept of dependence. A dependence is a relation between a producer and consumer of a value. A dependence is said to be preserved after the reordering transformation if the iteration that produces a value is computed before the consumer iteration. Legal iteration reorderings are those that preserve all the dependences in a given computation.

Array data dependence analyses determine data dependences between values stored in arrays. The relationship can be either memory-based or value-based. Memory-based dependences are induced by writes to and reads from the same memory location. Value-based dependences are induced by the production and consumption of values. One can view memory-based dependences as a relation between memory locations and value-based dependences as a relation between values produced and consumed. For computations represented by loop nests, the values produced and consumed can be uniquely associated with an iteration. Hence, dependences can be viewed as a relation between iterations.

Dependence analyses summarize these dependence relationships with a suitable representation. Different dependence representations can be used. Here, we introduce and use distance vectors, which can represent a particular kind of dependence, and discuss the legality of tiling with respect to them. More general representations such as direction vectors, dependence polyhedra, and cones can be used to capture general dependence relationships. Legality of tiling transformations can be naturally extended to these representations, but a discussion of them is beyond the scope of this article.

We consider perfect loop nests. Since, through array expansion, memory-based dependences can be automatically transformed to value-based dependences, we consider only the latter. For an $n$-deep perfect loop nest, the iterations can be represented as integer $n$-vectors.

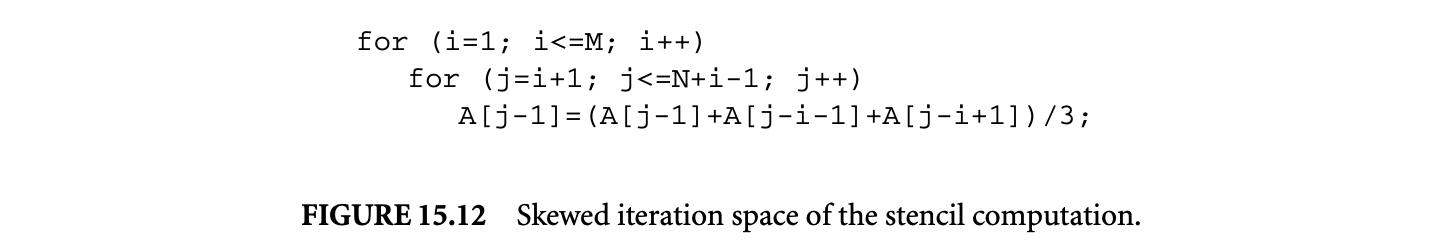

A dependence vector for an $n$-dimensional perfect loop nest is an $n$-vector $d = (d_1, \ldots, d_n)$, where the $k$th component corresponds to the $k$th loop (counted from outermost to innermost). A distance vector is a dependence vector $d \in \mathbb{Z}^n$, that is, every component is an integer. A dependence distance is the distance between the iteration that produces a value and the iteration that consumes it; distance vectors represent this information. The dependence distance vector for a value produced at iteration $p$ and consumed at a later iteration $c$ is $d = c - p$. The stencil computation given in Figure 15.7 has three dependences. The values consumed at an iteration $(i, j)$ are produced at iterations $(i, j-1)$, $(i-1, j)$, and $(i-1, j+1)$. The corresponding three dependence vectors are $(0, 1)^T$, $(1, 0)^T$, and $(1, -1)^T$.
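As a worked illustration, the distance vectors can be computed directly as consumer minus producer. The sketch below assumes a Gauss-Seidel style stencil in which iteration `(i, j)` reads the value produced at `(i, j-1)` in the current sweep and the values produced at `(i-1, j)` and `(i-1, j+1)` in the previous sweep; this access pattern and the function names are assumptions made for the example.

```python
def distance_vector(producer, consumer):
    """Distance vector: consumer iteration minus producer iteration."""
    return tuple(c - p for p, c in zip(producer, consumer))


def is_lex_positive(v):
    """True if the leading nonzero component of v is positive."""
    for x in v:
        if x != 0:
            return x > 0
    return False  # the zero vector is not lexicographically positive


# Assumed access pattern of the stencil at one sample iteration.
consumer = (5, 5)
producers = [(5, 4), (4, 5), (4, 6)]
distances = [distance_vector(p, consumer) for p in producers]
```

Every distance vector obtained this way is lexicographically positive, which reflects the fact that in the original loop order each producer executes before its consumer.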


Tiling is an iteration reordering transformation. Tiling reorders the iterations to be executed in a block-by-block or tile-by-tile fashion. Consider the tiled iteration space shown in Figure 15.7 and the following execution order. Both the tiles and the points within a tile are executed in the lexicographic order. The tiles are also executed in an atomic fashion, that is, all the iterations in a tile are executed before any iteration of another tile. It is very instructive to pause for a moment and ask whether this tiled execution order preserves all the dependences of the original computation. One can observe that the dependence $(1, -1)^T$ is not preserved, and hence the tiling is illegal. There exists a nice geometric way of checking the legality of a tiling. A given tiling is illegal if there exist cyclic dependences between tiles. An instance of this cyclic dependence is highlighted in Figure 15.7.
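Whether a tiled execution order preserves the dependences can also be checked by brute force on a small iteration space. The following sketch assumes the three distance vectors (0, 1), (1, 0), and (1, -1) for the stencil, a 4 x 4 iteration space, and 2 x 2 rectangular tiles; all of these, along with the function names, are illustrative choices.

```python
def rectangular_tiled_order(n_i, n_j, s_i, s_j):
    """Tiles in lexicographic order, points within a tile in lexicographic order."""
    points = [(i, j) for i in range(n_i) for j in range(n_j)]
    return sorted(points, key=lambda p: (p[0] // s_i, p[1] // s_j, p[0], p[1]))


def violated_dependences(order, distances):
    """Pairs (producer, consumer) where the producer runs after the consumer."""
    position = {point: k for k, point in enumerate(order)}
    violations = []
    for consumer in order:
        for d in distances:
            producer = tuple(c - x for c, x in zip(consumer, d))
            if producer in position and position[producer] > position[consumer]:
                violations.append((producer, consumer))
    return violations


order = rectangular_tiled_order(4, 4, 2, 2)
violations = violated_dependences(order, [(0, 1), (1, 0), (1, -1)])
```

In this setting the only violated dependences are instances of the distance vector (1, -1): for example, iteration (1, 1) is executed in the first tile, but the value it needs from (0, 2) is produced in the next tile.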

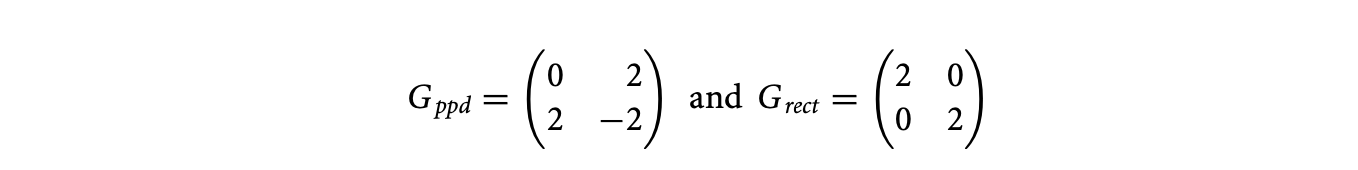

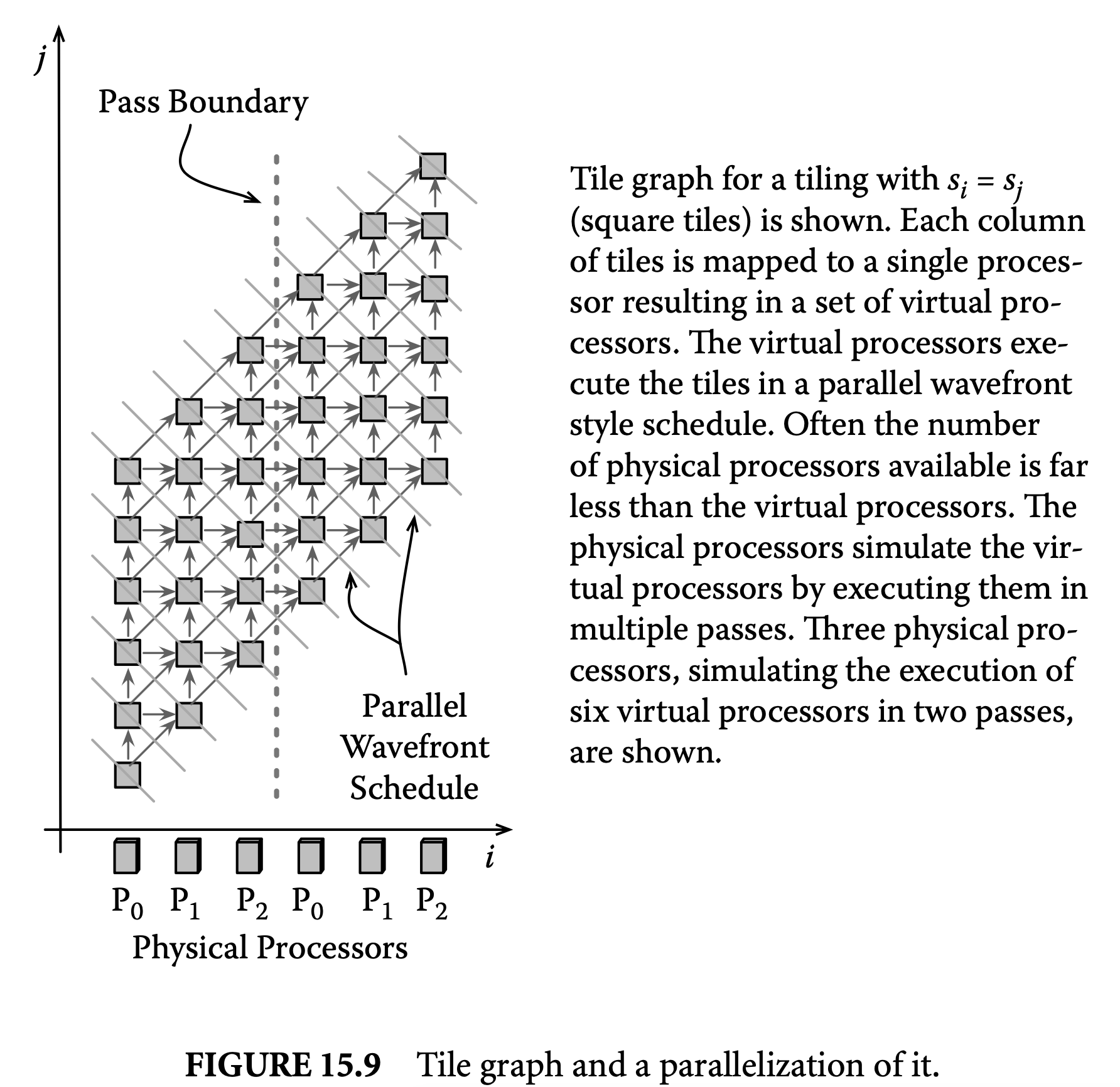

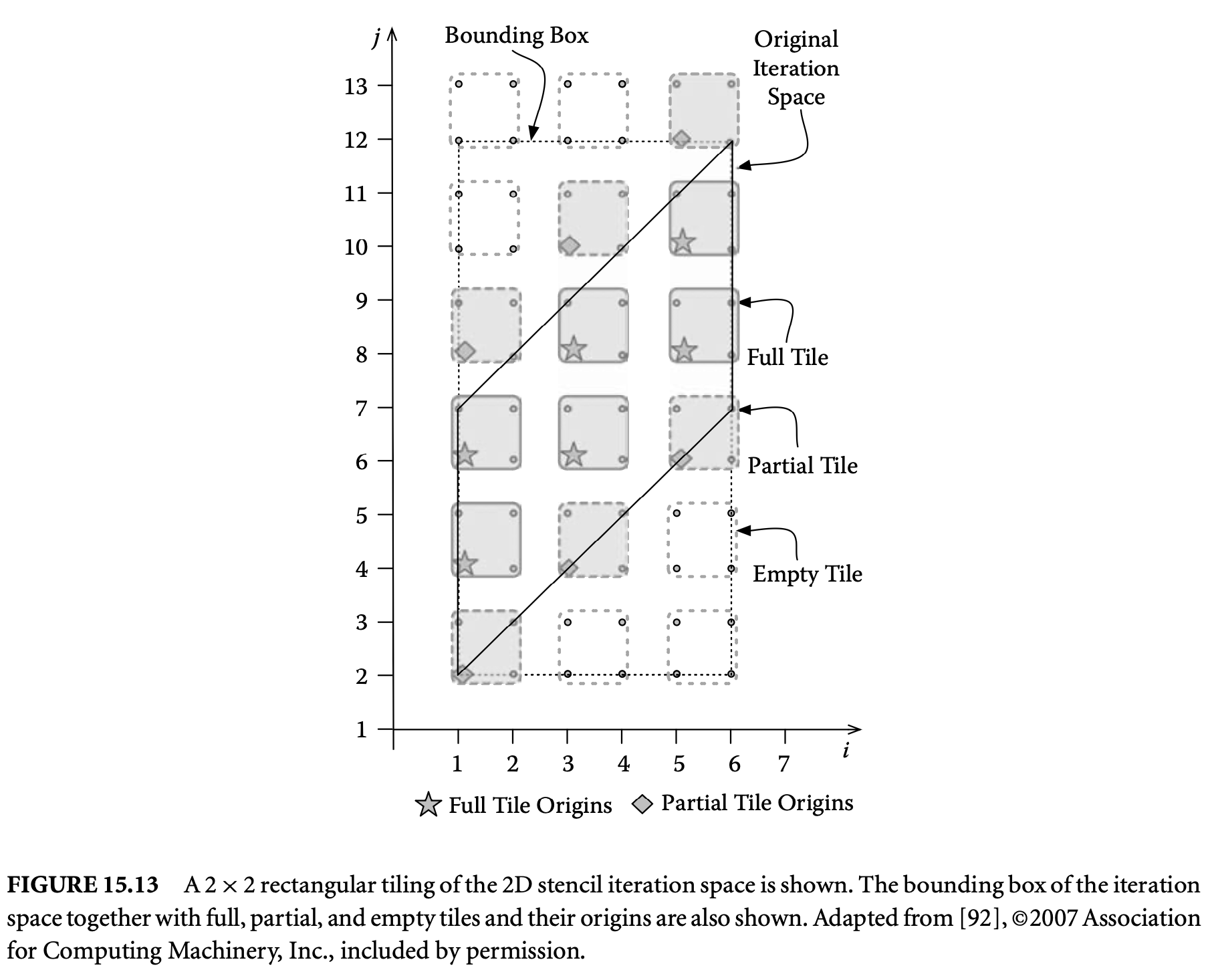

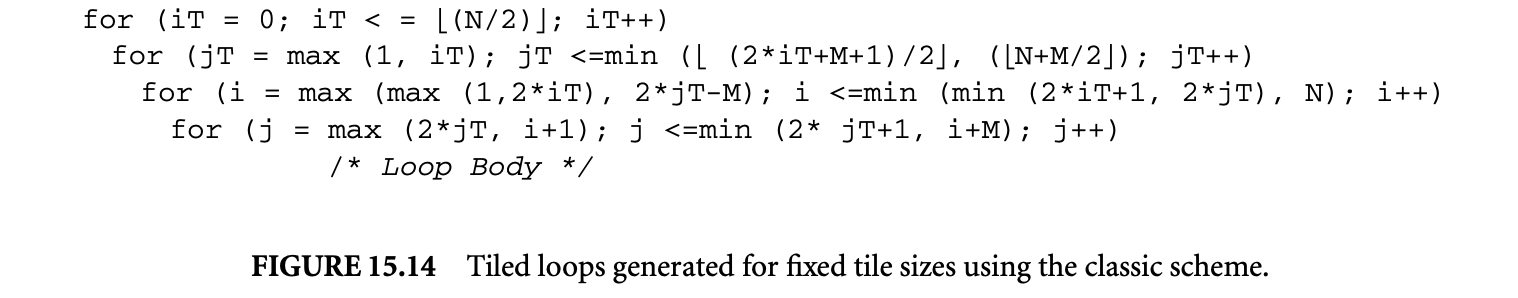

The legality of tiling is determined not by the dependences alone, but also by the shape of the tiles. We saw (Figure 15.7) that tiling the stencil computation with rectangles is illegal. However, one might wonder whether there are other tile shapes for which tiling is legal. Yes, tiling with parallelograms is legal, as shown in Figure 15.8. Note how the change in the tile shape has avoided the cyclic dependences that were present in the rectangular tiling. Instead of considering nonrectangular shapes that make tiling legal, one could also consider transforming the data dependences so that rectangular tiling becomes legal. Often, one can easily find such transformations. A commonly used transformation is skewing. The skewed iteration space is shown in Figure 15.8 together with a rectangular tiling. Compare the dependences between tiles in this tiling with those in the illegal rectangular tiling shown in Figure 15.7. One could also think of more complicated tile shapes, such as hexagons or octagons. Because of the complexity of tiled code generation, such tile shapes are not used.
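The effect of skewing on the dependences can be sketched in a few lines. The example assumes the three distance vectors (0, 1), (1, 0), and (1, -1) for the stencil and a skew of the inner index by the outer one, that is, (i, j) is mapped to (i, j + i); the skewing factor of 1 and the function name are assumptions for illustration.

```python
def skew(v, factor=1):
    """Skew the inner index by the outer one: (i, j) -> (i, j + factor * i)."""
    i, j = v
    return (i, j + factor * i)


distances = [(0, 1), (1, 0), (1, -1)]
skewed = [skew(d) for d in distances]
all_nonnegative = all(x >= 0 for d in skewed for x in d)
```

Because skewing is a linear map, applying it to the iterations transforms each distance vector in the same way. After the skew, no distance vector has a negative component, so no dependence can point from a later rectangular tile back to an earlier one.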

Legality of tiling also depends on the shape of the iteration space. However, for practical applications, we can check the legality with the shape of the tiles and dependence information alone.

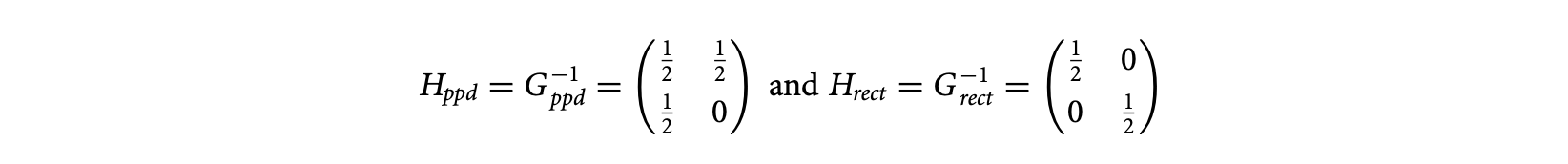

A given tiling can be characterized by the shape and size of the tiles, both of which can be concisely specified with a matrix. Two matrices, clustering and tiling, are used to characterize a given tiling. The clustering matrix has a straightforward geometric interpretation, and the tiling matrix is its inverse and is useful in defining legality conditions. A parallelogram (or a rectangle) has four vertices and four edges. Let us pick one of the vertices to be the origin. Now we have two edges or two vertices adjoining the origin. The shape and size of the tiles can be specified by characterizing these edges or vertices. We can easily generalize these concepts to higher dimensions. In general, an $n$-dimensional parallelepiped has $2^n$ vertices and $2n$ facets (higher-dimensional edges), out of which $n$ facets and $n$ vertices adjoin the origin. A clustering matrix is an $n \times n$ square matrix whose columns correspond to the $n$ facets that determine a tile. The clustering matrix has the property that the absolute value of its determinant is equal to the tile volume.
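The relation between the clustering matrix, its inverse, and the tile volume can be checked numerically. The sketch below uses a hypothetical 2 x 2 integer clustering matrix whose columns (4, 0) and (2, 3) are the two tile edges adjoining the origin; the matrix and the helper names are assumptions, not the tilings of Figure 15.8. For an integer clustering matrix and a half-open tile, the number of integer points in the tile equals the absolute value of the determinant.

```python
from fractions import Fraction


def det2(m):
    """Determinant of a 2x2 matrix given as a tuple of rows."""
    (a, b), (c, d) = m
    return a * d - b * c


def inverse2(m):
    """Exact inverse of a 2x2 integer matrix (the tiling matrix)."""
    (a, b), (c, d) = m
    det = Fraction(det2(m))
    return ((d / det, -b / det), (-c / det, a / det))


def points_in_tile(clustering):
    """Integer points x whose tile coordinates H*x lie in [0, 1) x [0, 1)."""
    (a, b), (c, d) = clustering
    h = inverse2(clustering)
    firsts = [0, a, b, a + b]     # first coordinates of the four vertices
    seconds = [0, c, d, c + d]    # second coordinates of the four vertices
    points = []
    for x in range(min(firsts), max(firsts) + 1):
        for y in range(min(seconds), max(seconds) + 1):
            u = h[0][0] * x + h[0][1] * y
            v = h[1][0] * x + h[1][1] * y
            if 0 <= u < 1 and 0 <= v < 1:
                points.append((x, y))
    return points


# Hypothetical clustering matrix: its columns (4, 0) and (2, 3) are the tile edges.
C = ((4, 2), (0, 3))
H = inverse2(C)
volume = abs(det2(C))
```

Exact rational arithmetic is used so that the membership test on the tile boundary is not affected by floating-point rounding.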

The clustering matrices of the parallelogram and rectangular tilings shown in Figure 15.8 are

For the parallelogram tiling, the first column represents the horizontal edge, and the second represents the oblique edge. For the rectangular tiling, the first column represents the horizontal edge, and the second represents the vertical edge.

The tiling matrix is the inverse of the clustering matrix. The tiling matrices of the parallelogram and the rectangular tilings shown in Figure 15.8 are

For rectangular tiling the edges are always along the canonical axes, and hence, there is no loss of generality in assuming that the tiling and clustering matrices are diagonal. The tiling is completely described by just the so-called tile size vector, $s = (s_1, \ldots, s_n)$, where each $s_i$ denotes the tile size along the $i$th dimension. The clustering and tiling matrices are simply $\mathrm{diag}(s_1, \ldots, s_n)$ and $\mathrm{diag}(1/s_1, \ldots, 1/s_n)$, respectively, where $\mathrm{diag}(x_1, \ldots, x_n)$ denotes the diagonal matrix with $x_1, \ldots, x_n$ as the diagonal entries.
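For rectangular tiles, applying the diagonal tiling matrix and taking the floor reduces to an integer division per dimension. A minimal sketch, with hypothetical function names and tile sizes:

```python
def tile_of(point, sizes):
    """Tile coordinates of an iteration: component-wise floor(x_i / s_i)."""
    return tuple(x // s for x, s in zip(point, sizes))


def tile_origin(tile, sizes):
    """First iteration of a tile: the diagonal clustering matrix applied to it."""
    return tuple(t * s for t, s in zip(tile, sizes))


sizes = (2, 3)
tile = tile_of((5, 7), sizes)
origin = tile_origin(tile, sizes)
```

Python's `//` rounds toward negative infinity, so it matches the floor operator for negative indices as well; in languages where integer division truncates toward zero, negative iteration coordinates need separate care.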


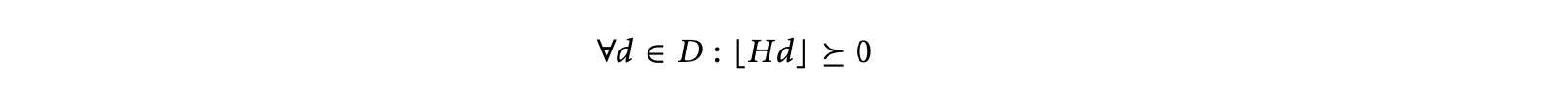

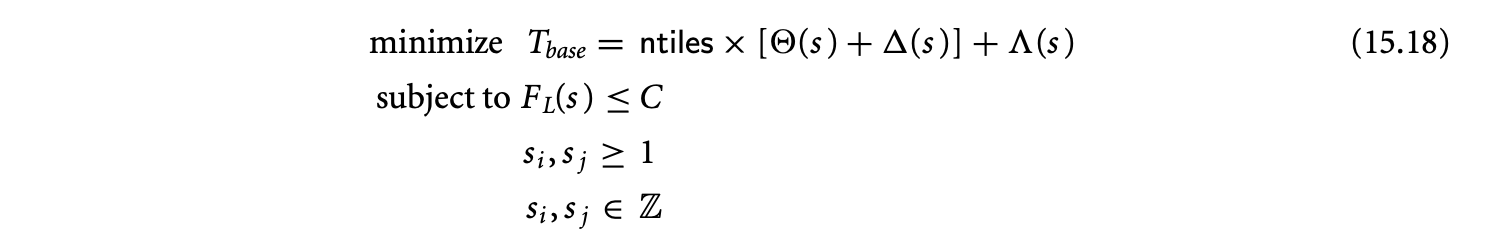

A geometric way of checking the legality of a given tiling was discussed earlier. One can derive formal legality conditions based on the shape and size of the tiles and the dependences. Let $D$ be the set of dependence distance vectors. A vector $x = (x_1, \ldots, x_n)$ is lexicographically nonnegative, written $x \succeq 0$, if the leading nonzero component of $x$ is positive, that is, $x_1 > 0$ or both $x_1 = 0$ and $(x_2, \ldots, x_n) \succeq 0$. The floor operator when used on vectors is applied component-wise, that is, $\lfloor x \rfloor = (\lfloor x_1 \rfloor, \ldots, \lfloor x_n \rfloor)$. The legality condition for a given (rectangular or parallelepiped) tiling specified by the tiling matrix $H$ and dependence set $D$ is

$$\forall d \in D : \lfloor H d \rfloor \succeq 0.$$

The above condition formally captures the presence or absence of cycles between tiles.
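The legality test can be sketched as follows, assuming that the condition takes the form $\lfloor H d \rfloor \succeq 0$ for every distance vector $d$, where $H$ is the tiling matrix. The distance vectors, the 2 x 2 tile size, the skew used to build the parallelogram tiling, and the function names are all assumptions chosen for illustration.

```python
from fractions import Fraction
from math import floor


def lex_nonnegative(v):
    """True if v is zero or its leading nonzero component is positive."""
    for x in v:
        if x != 0:
            return x > 0
    return True


def tiling_is_legal(tiling_matrix, distances):
    """Check floor(H d) >= 0 (lexicographically) for every distance vector d."""
    for d in distances:
        image = [floor(sum(Fraction(h) * x for h, x in zip(row, d)))
                 for row in tiling_matrix]
        if not lex_nonnegative(image):
            return False
    return True


half = Fraction(1, 2)
distances = [(0, 1), (1, 0), (1, -1)]

# Rectangular 2 x 2 tiles: H = diag(1/2, 1/2).
H_rect = ((half, 0), (0, half))
# Parallelogram tiles: the same H composed with the skew (i, j) -> (i, j + i).
H_para = ((half, 0), (half, half))

rect_legal = tiling_is_legal(H_rect, distances)
para_legal = tiling_is_legal(H_para, distances)
```

With these inputs the rectangular tiling is rejected because the distance vector (1, -1) maps to (0, -1), which is lexicographically negative, while the parallelogram tiling maps every distance vector to a lexicographically nonnegative vector.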

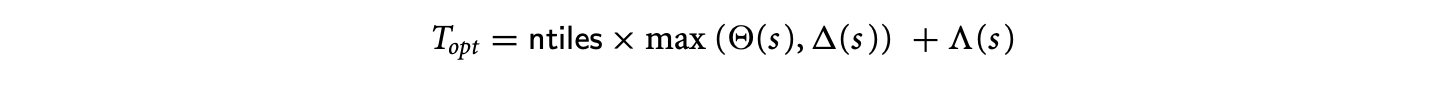

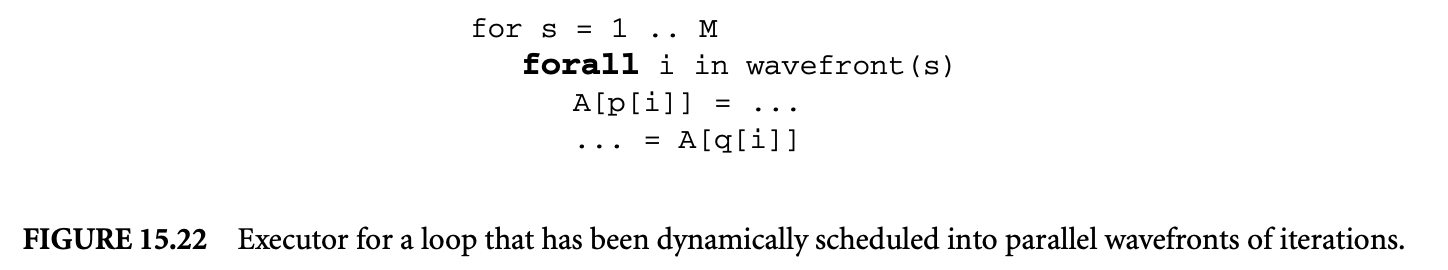

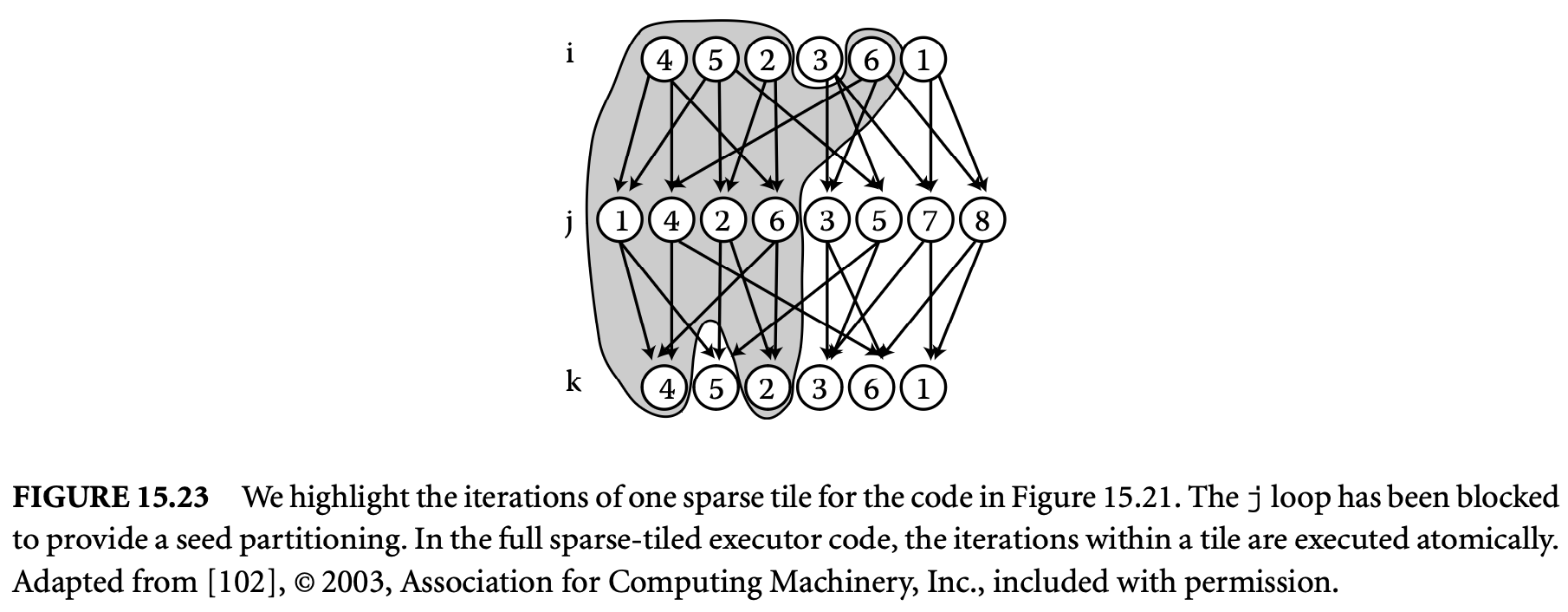

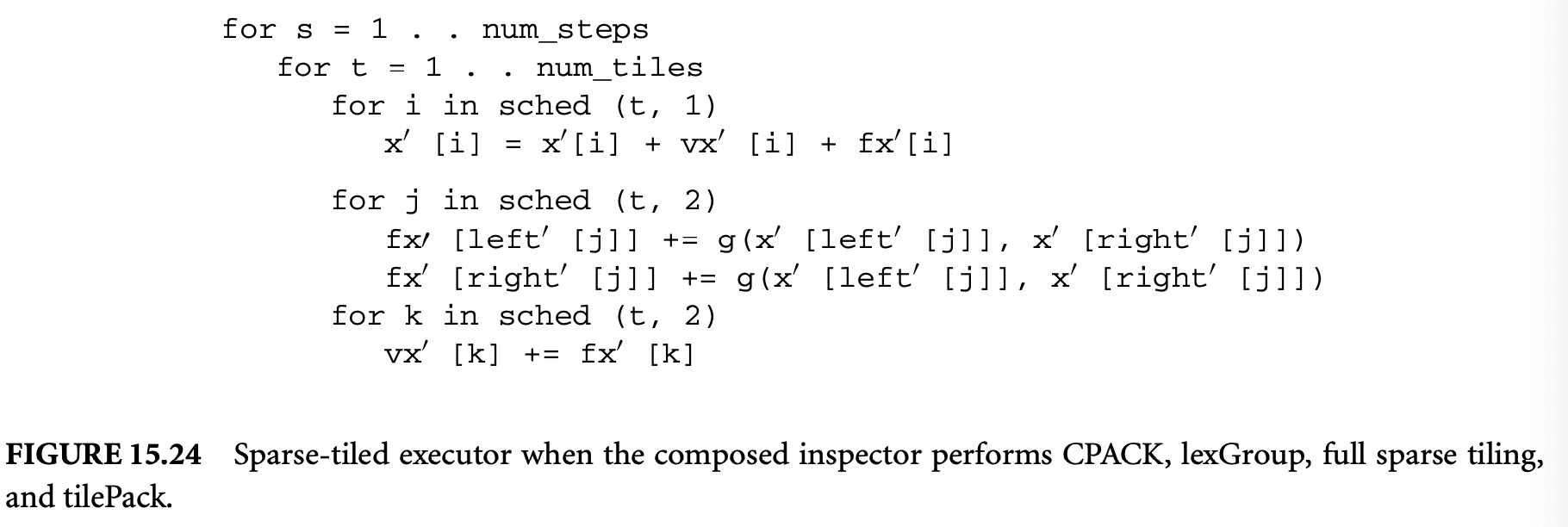

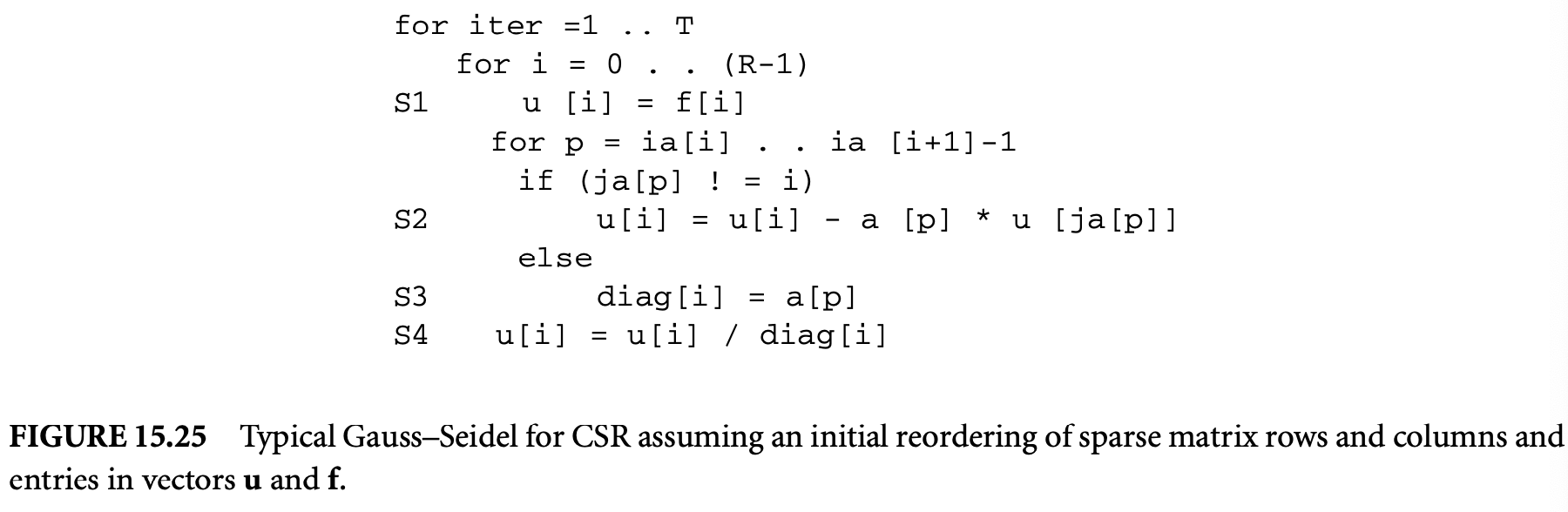
